If any industry is evolving quickly at the moment, it’s data engineering. Although the industry has always moved swiftly, it is currently going through a metamorphosis unlike anything seen in the past due to advances in AI and a changing landscape of top data engineering tools and technology. It might be intimidating for a data expert to navigate this always-changing environment. What ought to be your main concern? How do you make sure you’re staying ahead of the curve rather than merely keeping up?

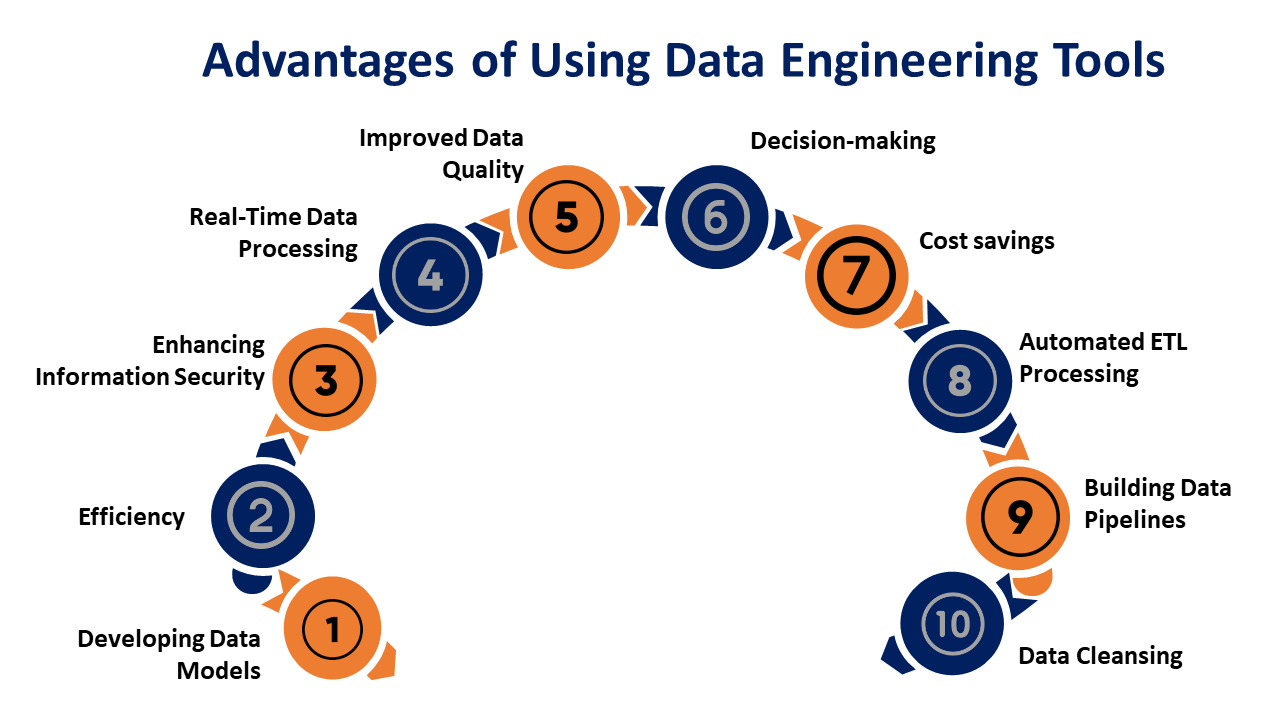

The link between unprocessed data and useful insights is created by top data engineering tools. Information on client interactions, transactions, and social media activity is continuously thrown at businesses. There is a ton of opportunity in this data flood to find insightful information, streamline processes, and make wise decisions. Nonetheless, there is a vast amount of unrealized potential in raw data kept in isolated systems.

With the use of these technologies, data engineers may convert unprocessed data into a format that is easily accessed and used for analysis and strategic decision-making. Top data engineering tools are vital assets for any organization since they streamline the processes of data ingestion, translation, and management.

Role of Data Engineering

The amount of data being created every day is estimated to be 328.77 million gigabytes. This is a startling amount given the state of data proliferation. Surprisingly, in just the last two years, 90% of the world’s data has appeared. This indicates an exponential increase in the amount of information generated. According to predictions, data creation is expected to surpass 181 zettabytes by 2025. This is a noteworthy 150% growth from 2023.

For companies looking to thrive in today’s fiercely competitive world, raw data is an invaluable resource. It plays an important role in comprehending market trends, improving internal operations, and reaching well-informed judgments. Herein lies the role of data engineering solutions. It is the cornerstone of effective artificial intelligence, business intelligence, and data analysis systems.

So, what is data engineering? The field of data engineering solutions is devoted to planning, constructing, and managing systems. It includes the infrastructure required to efficiently manage massive amounts of data. Data gathering, storing, processing, integrating, and analyzing are all part of data engineering services. By converting unprocessed data into useful knowledge, top data engineering tools enable businesses to accomplish their strategic goals and make wise judgments.

Impact of Data Engineering

Data engineering has a truly revolutionary effect on businesses

Well-informed decision-making: Data engineering services enable organizations to make data-driven decisions by organizing and making data easily accessible. This might be everything from simplifying product development based on actual user information to enhancing marketing strategies.

Enhanced productivity: Data engineering platforms free up important time and resources for other crucial tasks by automating laborious operations like data collection and transformation. Workflows that are more efficiently run also save money.

Improved innovation: Data engineering services make it possible to find hidden trends and patterns in data. This gives companies the ability to innovate by spotting untapped markets and creating data-driven solutions.

Are You Suffering From Data Challenges? Let’s Solve Them Together With Our Services

Top Data Engineering Tools of 2024

These are a few of the many tools used by data engineers for various tasks, including data processing and analytics. Thus, the following list includes some of the top data engineering tools that will be in use in 2024:

1. Python

Python features an easy-to-understand syntax that facilitates learning and increases comprehension. With the top data engineering tools, developers of different skill levels can quickly produce robust solutions and prototypes across several disciplines. Python is supported by a large number of libraries, including those for web development, machine learning, data analysis, and frameworks that make coding jobs more efficient. Its cross-platform portability also makes it appropriate for usage on a range of operating systems, which makes it a great option as the main programming language needed for several projects in a variety of industries.

Due to its interpreted nature and Global Interpreter Lock (GIL), which restricts its performance capabilities, Python may provide difficulties for high-concurrency scenarios or performance-critical applications. Moreover, maintaining package versions and dependencies with great care is necessary to guarantee compatibility and prevent conflicts in Python applications, which may be a laborious task.

Use Case for Data Engineering

For retail businesses, Python can be used to extract sales data from various sources. Including databases and APIs, perform transformations using libraries like Pandas to clean and manipulate the data. It then stores the data in a centralized warehouse or storage system.

2. SQL

One of the data engineering technologies for data engineers is SQL or Structured Query Language. This has both benefits and drawbacks when it comes to database management and querying. Positively, massive datasets can be quickly retrieved and altered thanks to SQL’s faster query processing. Its simple syntax, which includes fundamental phrases like SELECT and INSERT INTO, simplifies data retrieval without requiring a high level of coding knowledge. Because of its long history, copious documentation, and standardized language, SQL promotes global standardization in database management.

SQL’s built-in data integrity restrictions ensure accuracy and prevent duplication, while its portability between platforms and integration into a variety of applications further augment its versatility and accessibility. For instance, top data engineering companies can streamline operational reporting, analysis, and automation capabilities by exporting Jira data to multiple SQL Databases, such as PostgreSQL, Microsoft SQL Server, MySQL, MariaDB, Oracle, and H2, without the need for coding, by using SQL Connector for Jira.

Despite being a popular language of choice for top data engineering companies for data administration, SQL can have a complicated interface and may have additional expenditures. In addition, these databases must be tuned for real-time analytics, and as the amount of data being stored grows over time, hardware changes can be required.

Use Case for Data Engineering

In situations like maintaining transaction data in financial systems, SQL is helpful. With the help of features like constraints and transactions, organizations can create relational database schemas that effectively store and retrieve structured data while guaranteeing data consistency and integrity. SQL makes it easier for data to be ingested from a variety of sources, allowing transaction data to be transformed and loaded into database tables. Sensitive financial data is protected by strong security measures, and users may derive actionable insights through sophisticated analysis and reporting because of its robust querying capabilities.

3. PostgreSQL

One of the top data engineering tools originally known as POSTGRES, PostgreSQL is a robust object-relational database management system (ORDBMS). It is available as one of the open-source ETL tools for data engineers. It is well-known for both its extensive feature set and standard compliance. Notably, it meets a variety of data modeling demands by providing a wide range of data types, including sophisticated choices like arrays, JSON, and geometric forms. PostgreSQL also guarantees transactional stability by adhering to the ACID principles, and it uses Multi-Version Concurrency Control (MVCC) to allow high concurrency without sacrificing data integrity. Sophisticated querying features, like window functions and recursive query support, enable users to effectively carry out intricate data manipulations. PostgreSQL’s indexing strategies—which include hash, GiST, and B-tree—also improve query performance, which is another reason why heavy workloads favor it.

Even with its advantages, PostgreSQL is not without its drawbacks. Performance problems and less-than-ideal CPU utilization might arise from PostgreSQL’s interpretive SQL engines, particularly when dealing with intricate and CPU-intensive queries. Moreover, compared to other databases, its implementation can take longer, which could delay the project’s time to market. Although PostgreSQL performs well with large-scale applications and has alternatives for horizontal scalability, its performance in cases involving a high volume of readings may be impacted by its somewhat slower reading speeds.

Use Case for Data Engineering

A retail business that wants to effectively manage and analyze its sales data to learn more about consumer behavior, product performance, and market trends will find PostgreSQL useful. Relational database schemas can be created using PostgreSQL to hold a variety of sales data elements, such as customer profiles, product specifications, transaction histories, and sales channels. With the right indexing and normalization to guarantee data integrity, the schema can be streamlined for effective querying and analysis.

Partner With Us To Find An Innovative Solution To Seize The Data Advantage

4. BigQuery

BigQuery is a serverless data warehouse solution from Google Cloud. We can distinguish it by its scalable architecture and machine learning integration. It has real-time analytics capabilities, SQL interface, and support for geospatial analysis. These features all work together to simplify data analysis procedures and promote collaboration between projects and organizations while upholding robust data security. This top data engineering tool also provides outstanding performance, an affordable pay-as-you-go pricing plan, smooth connectivity with other Google Cloud services, and even more with third-party plugins. To improve data management, for example, non-technical users can load Jira data into BigQuery using the BigQuery Connector for Jira.

The learning curve involved in mastering particular BigQuery features, cost management considerations to avoid unforeseen expenses, limited customization options compared to self-managed solutions, and potential data egress costs when transferring data out of BigQuery to other services or locations are just a few of the potential drawbacks that users should be aware of. These issues highlight the significance of careful optimization and management practices to maximize BigQuery’s benefits and minimize its drawbacks.

Use Case for Data Engineering

A telecoms company looking to analyze client data to improve customer experience, optimize service offerings, and lower churn rates may find BigQuery, one of the best data engineering tools, useful. BigQuery is integrated with multiple customer data sources, such as call logs, internet usage logs, billing data, and customer support interactions. It may entail bulk uploads from billing systems, real-time data streaming from call centers, and API linkages with other service providers.

5. Tableau

Renowned for its drag-and-drop functionality, real analysis capabilities, collaboration tools, mobile compatibility, and sophisticated visualization techniques, Tableau is a top data engineering tool. It is well-known for being simple to use, having the capacity to combine data, having strong community support, being flexible in customization, and being scalable to handle big datasets. Its many possibilities for data communication are its greatest asset. For example, you can quickly retrieve data from Jira, ServiceNow, Shopify, Zendesk, and Monday.com using Alpha Serve’s enterprise-grade Tableau data connections.

Even though it’s one of the top data engineering tools available, this solution has a steep learning curve for advanced features, is expensive, may cause performance problems with large, complicated datasets, and has limited data pretreatment capabilities. This data engineer manager is still in demand by businesses looking for strong data visualization solutions across a range of industries, despite these disadvantages.

Use Case for Data Engineering

A strong data engineering framework is provided by the Tableau Connector for Zendesk integration for evaluating and improving customer support performance. An artificial intelligence development company can obtain a thorough understanding of customer service performance, facilitating data-driven decision-making and ongoing development. The role of data engineers and analysts can save time and effort by automating operations related to data extraction and integration with Zendesk Connector. Furthermore, real-time monitoring features guarantee that businesses address customer care concerns quickly, enhancing client retention and happiness.

6. Looker Studios

Google’s free top data engineering tools Looker Studio, formerly known as Google Data Studio, is a visualization and reporting tool with capabilities like real-time data access, dashboard customization, collaboration, and simple report sharing and publishing. Cost-effectiveness, easy interaction with Google goods, an intuitive interface, customization possibilities, and cloud accessibility are some of its benefits.

But Looker Studio can be difficult to use for handling large datasets or complicated data transformations; it doesn’t have some of the advanced data analytics solutions features available in tools that cost money. It can run slowly for larger reports or certain types of data, and it might require some getting used to before you can fully utilize all of its features. Because of this, to properly address its analytical limitations, organizations with more complex data analysis requirements might need to augment it with additional tools. Using third-party connectors, for instance.

Use Case for Data Engineering

Looker Studio can be very helpful to a software development company that wants to increase the efficacy and efficiency of project management. With the help of the Looker Studio Connector for Jira, project-related data from Jira, such as task status, sprint metrics, team performance, and issue resolution times, can be exported and imported into Looker Studio. Data engineers produce dynamic dashboards and reports that offer thorough insights into project management performance using Looker Studio’s user-friendly interface. Metrics like sprint velocity, team productivity, issue backlog trends, and project completion rates can all be found on these dashboards.

7. BI Power

A data visualization tool, Power BI, provides a set of top data engineering tools. This includes sharing, aggregating, analyzing, and visualizing data. The features include:

- -Versatile data connectivity,

- -interactive visualizations,

- -strong modeling and data transformation capabilities,

- -AI support for insights,

- -customization choices, and

- -collaboration tools

Benefits include

- -scalability,

- -robust security,

- -frequent updates,

- -easy interface maintenance, and

- -seamless integration with Microsoft products

Nevertheless, there are several drawbacks, such as the free version’s limited data refresh, the learning curve for advanced features, subscription costs for the Pro and Premium versions, possible performance problems with large datasets, and reliance on other Microsoft products for integration. Fortunately, Power BI Connectors can help to lessen these drawbacks.

Use Case for Data Engineering

Power BI has become one of the powerful top data engineering tools for financial performance analysis with the help of Power BI Connector for QuickBooks. After integration, data engineers using Power BI solutions can produce dynamic dashboards and reports that include:

- -metrics such as cash flow forecasts,

- -expense breakdowns,

- -revenue trends, and

- -profitability analyses

Furthermore, the actual data retrieval capabilities of Power BI Connector for QuickBooks allow for real-time KPI monitoring, making it possible to spot financial trends and anomalies right away.

8. MongoDB

MongoDB is a popular cross-platform document-oriented database that is notable for its flexible schema design, segmentation-based scalability, optimized performance, strong query language, strong community support, and flexible deployment options.

Benefits include:

- -optimized performance for fast data access,

- -smooth scaling with sharding,

- -flexible schemas for changing data needs,

- -strong community and ecosystem support, and

- -extensive security features.

Nevertheless, MongoDB’s shortcomings as a top data engineering tool include its inability to support joins and complex transactions, data integrity issues brought on by a variety of data types, possible complexity in the schema design, higher memory consumption due to data redundancy, and document size restrictions.

Use Case for Data Engineering

To personalize user experiences and increase engagement, social media platforms may find that MongoDB can assist in the analysis and processing of ongoing user interactions, such as likes, comments, and shares. It can use change streams or aggregation pipelines to store and transform data about user interactions. The platform can quickly gain insights from user interactions thanks to MongoDB’s real-time data ingestion and processing feature. It results in more timely and relevant user experiences. The platform can manage increasing volumes of user data and traffic loads without compromising performance thanks to its horizontal scalability and sharding capabilities.

9. Apache Spark

With its remarkable speed and performance, versatility across multiple data engineering software, resilience through RDDs for fault tolerance, extensive libraries, thorough documentation, and strong community support, Apache Spark is an open-source framework that is well-known for big data processing and AI & ML. Pros include advanced analytics capabilities and faster access to big data workloads.

Despite its benefits, Apache Spark has drawbacks, including the requirement for manual optimization. It lacks automatic optimization procedures, possible financial issues brought on by the high memory requirements for in-memory computation, and a restriction on real-time processing because micro-batch processing is the only method available.

Use Case for Data Engineering

Consider a retail business that is preparing to use real-time consumer behavior analysis. This is to tailor advertising campaigns and improve inventory control. Apache Spark can come in handy to ingest streaming data from a variety of sources. Including social media interactions, transaction logs, and website clickstreams, using its Spark Streaming module. Then, using Spark’s robust libraries and APIs, the data is transformed. Real-time data analytics solutions, such as determining popular products, forecasting demand, and spotting fraud or odd activity, are made possible by Apache Spark. These insights can also be used to initiate continuous, automated processes, like dynamically adjusting inventory levels, sending customers personalized offers based on their browsing history, or flagging suspicious transactions for additional inquiry.

10. Kafka the Apache

Scalability, resilience, and real-time processing are three areas where Apache Kafka shines. Due to its distributed architecture, low latency and high throughput are guaranteed by allowing for parallel data processing across brokers. Kafka’s replication mechanism ensures message durability and guards against data loss, and its scalability makes it possible to add servers seamlessly and handle increasing data volumes without experiencing any downtime.

Furthermore, Kafka’s functionality is enhanced by its ability to integrate with data engineering technologies such as Apache Spark and Elasticsearch, and it is supported by a sizable user community for continued development and maintenance. Notwithstanding its benefits, Apache Kafka has certain drawbacks, including a steep learning curve for users, resource consumption, complexity in setup and operation, and security issues.

Use Case for Data Engineering

One application of Apache Kafka, the top data engineering tool, is the real-time detection of fraudulent activity to prevent financial losses and defend the assets of a financial institution’s clients. Large amounts of transaction data from online payments, credit card transactions, ATM withdrawals, and banking transactions will be ingested by this tool. Because of its event-driven architecture, events can be processed smoothly as they happen, enabling prompt detection and action in response to suspicious activity. Kafka’s resilience guarantees message continuity, averting data loss even in the event of system malfunctions. Lastly, it easily integrates with analytical tools like Elasticsearch and Apache Spark to further analyze data visualization of fraud patterns that have been found.

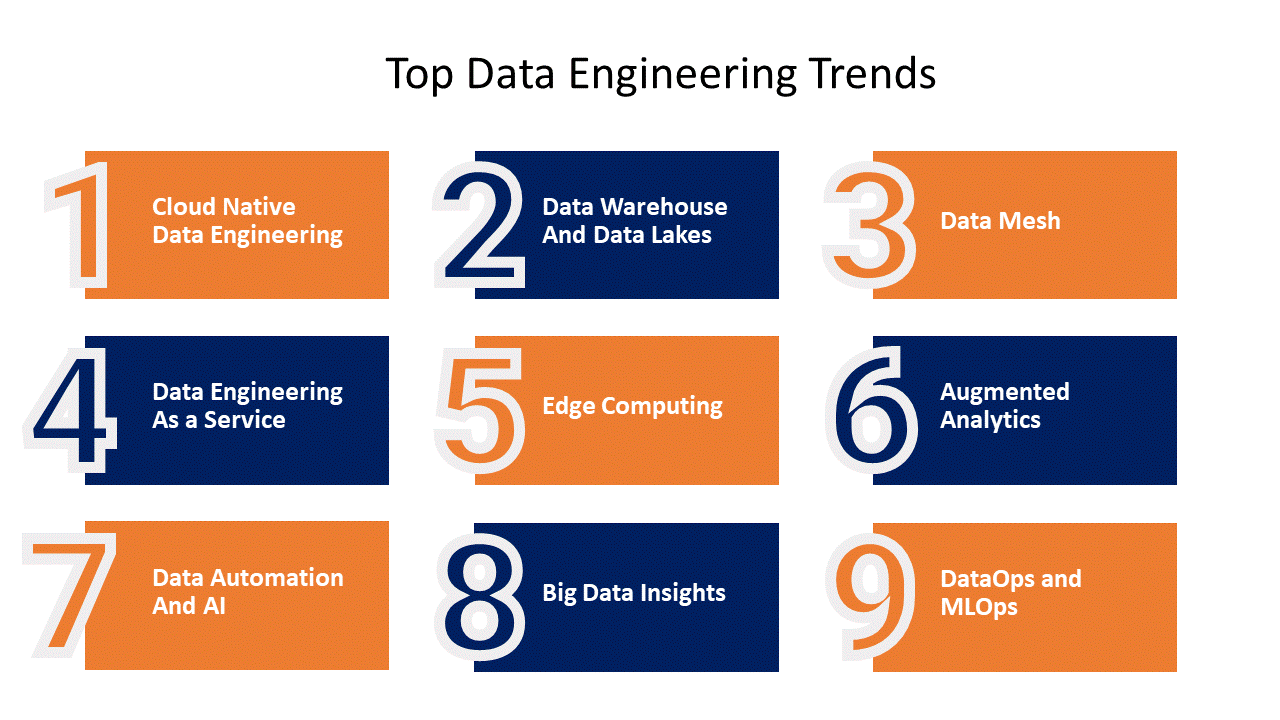

Future of Data Engineering

1. Intelligent Automation and AI-Powered Procedures

Data engineering automation is expected to progress further, with AI & ML playing key roles. Data quality checks and transformation logic are only two examples of the sophisticated decision-making procedures that AI-driven top data engineering tools may automate within data workflows. These developments will result in far less human labor and error-prone data pipelines in the big data industry that are more dependable and efficient.

2. Processing and Streaming Data in Real-Time

Technological developments in data streaming and processing are being driven by real-time data insights. Real-time analytics is now possible. By developing data engineering technologies like Apache Kafka, Apache Flink, and different cloud-native services. These offer use cases ranging from immediate fraud detection to dynamic pricing models.

3. Decentralized and Data Mesh Architectures

A domain-oriented decentralized approach to data management is promoted by the data mesh architectural paradigm. It focuses on decentralizing data ownership and governance. This idea promotes the treatment of data as a product, with distinct ownership and lifecycle management, to improve control, quality, and accessibility for large enterprises.

4. Improved Frameworks for Data Governance and Quality

Strong data governance and quality frameworks will be more and more crucial as the big data industry gets more complicated. More advanced procedures and technologies will be used by organizations to guarantee data security, consistency, and accuracy. It also includes compliance with laws like the CCPA and GDPR. This emphasis will contribute to the development of data and analytics outcomes trust.

5. Data engineering native to the cloud

The use of cloud-based ETL tools is revolutionizing the field of data engineering. Cloud Native provides scalability, flexibility, and cost-effectiveness. These help businesses quickly adjust to changing data requirements. One interesting development in this area is the incorporation of serverless computing into data pipelines for on-demand processing.

6. IoT and Edge Computing Information Technology

As the number of IoT devices rises, edge computing is emerging as a key field in data engineering. Applications can be made more responsive and context-aware by processing data closer to its source. This lowers latency and bandwidth consumption. To effectively manage and analyze ETL processes across remote networks, data engineering will need to change.

7. Integration of DataOps and MLOps

The merging of MLOps and DataOps approaches is becoming more popular. With an emphasis on enhancing the coordination between operations, data science services, and engineering teams. The goal of this connection is to expedite the delivery of dependable and significant data products. This is done by streamlining the entire lifecycle of data and machine learning models, from development to deployment and monitoring.

8. Technologies that Promote Privacy

Data engineering solutions will use more privacy-enhancing technologies (PETs) as privacy concerns increase. Organizations will be able to use data while maintaining individual privacy. This is thanks to strategies like federated learning, safe multi-party computation, and differential privacy.

9. Processing Graph Data

Applications like social networks, fraud detection, and recommendation systems that demand complex relationship analysis are driving the growing use of graph databases and processing frameworks. There will be greater integration of graph processing skills into platforms and top data engineering tools.

10. Integration of Data Across Platforms

Further developments in cross-platform data integration technologies, which provide smooth data integration and mobility across many settings, including different clouds and on-premises, are probably in store for the field of data engineering services in the future. This feature will be essential for businesses using multi-and hybrid-cloud infrastructures.

Explore Our Data Engineering Services to Drive Data Growth

Conclusion

To sum up, top data engineering tools are like the spark that raises the bar for data-driven companies. You may optimize your return on your investment, handle complex datasets, and streamline workflows by putting our carefully chosen selection of the greatest data engineering tools into practice. These excellent solutions make your data a strategic weapon instead of a burden by revealing its hidden possibilities. Obtain a competitive advantage by using statistical analysis to inform data-driven decision-making.

FAQs

-

Does a Data Engineer need Tableau?

Although it’s not a must for a Data Engineer, Tableau is useful for people who share insights and visualize data. It enhances data engineering abilities by making dashboard design interactive and collaborative.

-

Is Informatica a tool used in data engineering?

Indeed, Informatica is a useful tool for data engineers. It is frequently useful for ETL (Extract, Transform, Load) procedures, data integration, and data quality control.

-

Do any open-source or free data engineering tools exist?

Indeed, there are several open-source or free data engineering solutions accessible. Examples include PostgreSQL for database administration, Apache Kafka for real-time data streaming, and Apache Airflow for workflow automation.

-

What characteristics do most data engineering tools share?

Most data engineering tools have the following characteristics:

- -Data integration

- -ETL procedures

- -Workflow automation

- -Real-time data processing

- -Data quality control

- -Support for several data sources and formats.

-

What distinguishes a data engineer from a data scientist?

Data scientists enter the picture after the data process, whereas the role of data engineers comes in early. A data scientist’s extensive understanding of deep learning and machine learning enables them to address certain organizational issues and carry out sophisticated prediction studies. However, a lot of qualitative data is necessary for data scientists to do their analysis work effectively. The importance of data engineers’ roles is due to this very reason.

-

How to differentiate a data engineer from a data analyst?

Data analysts support companies in reaching their goals through better-informed decision-making. All of the data that the data engineer has provided in the data pipeline will be useful.

Better decision-making is possible by the individual’s ability to generate dashboards and reports. This is possible due to easier access to pertinent information.