The advent of artificial intelligence brought a paradigm shift in technology. Open-source large language models arrived just as people were growing comfortable with AI, and they have proven to be a potent tool. Large language models, or LLMs, have transformed natural language processing and made generative AI tasks such as text generation, translation, and text summarization more accurate.

Large language models became more widely available as demand for them grew. This wider availability is democratizing AI development and fostering an innovative, collaborative culture. There are more than 300 million businesses worldwide, and according to recent LLM statistics, nearly 58% of businesses use generative AI technologies, which depend on LLMs to process human language and create content.

Open-Source Large Language Model (LLM): What is it?

An open-source large language model is a sophisticated kind of language model that is trained on enormous volumes of text data using deep learning, and whose code or weights are published for others to use and adapt. These models can produce writing that resembles that of a human being and carry out many natural language processing tasks.

By way of comparison, a language model in general assigns probabilities to sequences of words, based on patterns learned from text corpora. Language models range in complexity from basic n-gram models to more advanced neural network models. The term “large language model” is typically reserved for models that use deep learning and have a very large number of parameters (millions or even billions). By capturing intricate linguistic patterns, these models can generate content that is often hard to distinguish from writing produced by people.
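To make the idea of assigning probabilities to word sequences concrete, here is a minimal sketch of a bigram model, the simplest kind of n-gram model. The three-sentence corpus and the function names are made up for illustration, and the sketch uses no smoothing, so any unseen word pair gives the whole sequence a probability of zero.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count adjacent word pairs and turn the counts into conditional probabilities."""
    pair_counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            pair_counts[prev][nxt] += 1

    model = {}
    for prev, counter in pair_counts.items():
        total = sum(counter.values())
        model[prev] = {word: count / total for word, count in counter.items()}
    return model


def sequence_probability(model, sentence):
    """Multiply P(next word | previous word) along the sentence."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= model.get(prev, {}).get(nxt, 0.0)  # unseen pair -> probability 0
    return prob


# Hypothetical toy corpus, only for illustration.
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(corpus)

print(sequence_probability(model, "the cat sat"))  # about 0.333
print(sequence_probability(model, "the dog ran"))  # 0.0, "dog ran" never occurs
```

A large language model plays the same role, but it replaces the lookup table of counts with a neural network that can generalize to word sequences it has never seen.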


Open-Source Large Language Model Types

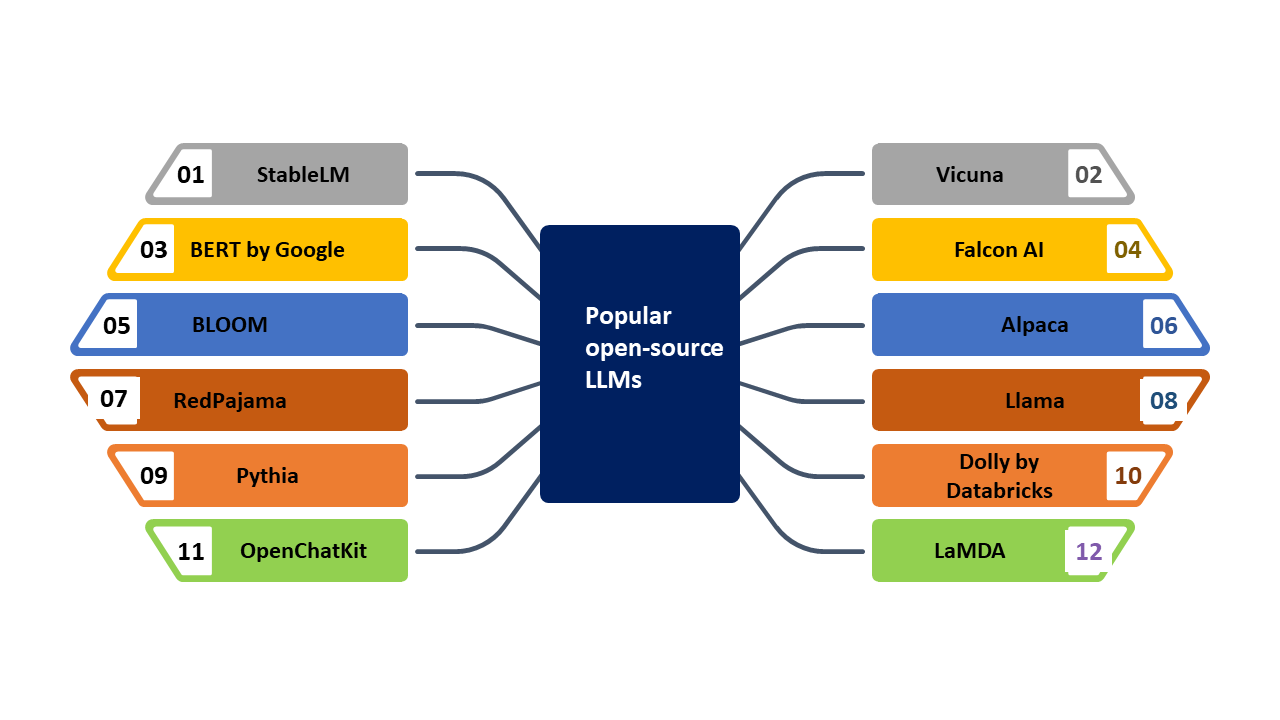

The different kinds of open source large language models that are currently available in the digital world are listed below:

Transformer Models

First, modern text generation and generative AI have come to be associated with transformer-based architectures such as the GPT (Generative Pre-trained Transformer) series. By capturing context and long-range dependencies, transformer-based LLMs have revolutionized generative AI applications ranging from content creation to language translation.

Recurrent Neural Networks

Second, recurrent neural networks (RNNs) remain significant and influential in language modeling, even though transformer-based models are now the best known. RNN variants such as the LSTM (Long Short-Term Memory) network power applications such as speech recognition and sentiment analysis.

Bidirectional Encoder Representations from Transformers or BERT

Third, BERT takes a bidirectional approach to language understanding and is widely regarded as a model that transformed natural language processing. BERT takes into account the context on both sides of each word, to the left and to the right, resulting in strong performance on a variety of tasks such as sentiment analysis and question answering.

How Do You Build a Large Language Model (LLM)?

Known as a “large language model,” a large-scale transformer model is usually too big to run on a single machine and is instead offered as a service via an API or web interface. Large volumes of text data from publications like books, essays, websites, and a variety of other textual materials are used to train these large language models. Through this process of training, the models analyze statistical correlations between words, phrases, and sentences, enabling them to produce coherent and contextually relevant responses to questions or prompts. Additionally, these models can be made more accurate and useful by fine-tuning them using unique datasets to train them for specific applications.

An LLM-based system like ChatGPT was trained on a vast volume of online content, which gave it the ability to handle a wide range of languages and subjects. It can therefore output text in a variety of styles. Its capabilities, such as text summarization, question answering, and translation, are not surprising considering that these tasks rely on distinctive “grammars” that prompts can invoke.

How do Open-source LLMs work?

Large language models are trained in two stages: extensive pre-training, followed by fine-tuning. Through fine-tuning on specialized data sets, LLMs can be tailored to a variety of specific objectives. Let’s dissect this procedure and look closely at each step:

Pre-training

Open-source LLMs are advanced artificial intelligence models that have been pre-trained on a significant amount of data, sometimes referred to as a corpus, gathered from various sources such as public forums, tutorials, Wikipedia, and GitHub. At this point, LLMs engage in “unsupervised learning,” using unlabeled, unstructured data sets. These data sets can contain trillions of words, which the model learns to relate to one another. Unstructured data is important here precisely because so much more of it is available than labeled data. Given this data without explicit instruction, the training algorithm shapes the model’s knowledge of human language, helping it capture semantics and form connections between words and concepts.
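One common way to learn from unlabeled text, used by GPT-style models, is next-token prediction: the text itself supplies the training targets. The sketch below illustrates that idea under simplifying assumptions. It splits on whitespace instead of using a real tokenizer, and the function name and `context_size` parameter are hypothetical.

```python
def next_token_examples(text, context_size=3):
    """Turn raw text into (context, next_token) training pairs.

    No human labels are needed: each word serves as the target
    for the words that come before it.
    """
    tokens = text.split()  # simplification: real models use subword tokenizers
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples


examples = next_token_examples("open models can be fine tuned")
for context, target in examples:
    print(context, "->", target)
```

During pre-training, a model would be asked to predict each target from its context and would be adjusted whenever it gets the prediction wrong, repeated over an entire corpus.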

Fine-Tuning

The next stage is to fine-tune the knowledge that LLMs have gained after receiving unstructured input from various sources. At this point, there is some labeled data available, which LLMs can use to accurately identify and understand various concepts. To put it simply, fine-tuning is the process by which LLMs improve their comprehension of words and ideas in order to maximize their effectiveness on particular NLP-related tasks.

Once a base open-source LLM has been pre-trained, it can be further developed with specific instructions for a variety of practical uses. As a result, LLMs can be queried through prompt engineering, and they then use model inference to respond appropriately (e.g., by providing a translated text, a sentiment analysis report, or an answer to a query).

Large Language Models (LLMs) Vs. Generative AI

| Aspect | Large Language Models (LLMs) | Generative AI |
|---|---|---|
| Definition | A subset of generative AI focused on generating textual content based on textual data. | A broad category of AI that generates a wide range of content, including text, images, video, code, and music. |
| Examples | ChatGPT, GPT-3, BERT | DALL-E, Midjourney, Bard, ChatGPT |
| Input Types | Text | Text, images, audio, video, code |
| Output Types | Text (sentences, paragraphs, articles) | Text, images, audio, video, code |
| Training Data | Specialized in large volumes of textual data. | Can be trained on various types of data, including text, images, audio, and video. |
| Specialization | Produces coherent and contextually relevant textual content. | Capable of creating diverse types of content beyond text. |
| Complexity | Advanced understanding and generation of human language, recognizing and generating nuanced text. | Varies widely; can range from simple text generation to complex multimodal content creation. |
| Applications | Writing assistance, summarization, translation, content creation, chatbots. | Image generation, video creation, music composition, art generation, programming assistance, chatbots. |
| Training Techniques | Often involves techniques like transformer architectures, large-scale language modeling, fine-tuning on specific tasks. | Uses various architectures and techniques, including GANs, VAEs, transformers, and others. |
| Versatility | Primarily focused on text but can be adapted to handle text-related tasks across various domains. | Highly versatile, capable of generating content across multiple modalities and domains. |
| Notable Characteristics | High coherence in text generation, understanding of context, ability to generate long-form content. | Creativity in generating novel content, handling multimodal inputs and outputs. |
| Challenges | May produce plausible but incorrect or biased text, requires substantial computational resources for training. | Ethical and copyright issues, quality control across different types of generated content. |
| Future Potential | Enhanced language understanding, improved contextual awareness, more human-like interactions. | Expansion into new creative fields, improved cross-modal generation and integration, broader application scope. |

Why are large language models important?

LLMs have gained special significance in this age of digital transformation because they serve as foundation models on which a broad range of applications is built. In addition to giving AI and machine learning (ML) systems a grasp of human language, LLMs are highly valuable in fields like healthcare, finance, marketing, and entertainment because they can perform complex tasks such as sentiment analysis, content summarization, translation, and classification.

LLMs owe much of their capability to their vast parameter sets (think of them as a kind of memory), which are learned during training and adjusted during fine-tuning. LLMs are built on the transformer architecture, which in its original form consists of an encoder and a decoder. They process data by first “tokenizing” the input and then evaluating all the tokens concurrently to determine the relationships between them, that is, between words and concepts. LLMs are adaptable AI models that can be applied to a variety of use cases and settings, and companies across many industries use them for their accuracy and efficiency.
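The way a transformer relates tokens to one another is called self-attention. The following is a deliberately simplified sketch of scaled dot-product self-attention in plain Python. It assumes hand-made two-dimensional embeddings and omits the learned query, key, and value projections that a real model has, so it shows only the core mechanism of scoring every token against every other token.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score this token against every token in the sequence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # The output is a weighted mix of all token vectors.
        outputs.append(
            [sum(w * vec[i] for w, vec in zip(row, vectors)) for i in range(dim)]
        )
    return outputs, weights


# Hypothetical toy embeddings; real models learn vectors with many more dimensions.
embeddings = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [0.0, 0.9]}
tokens = "the cat sat".split()  # simplistic whitespace tokenization
outputs, weights = self_attention([embeddings[t] for t in tokens])

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

In this toy setup, "cat" places more weight on "sat" than on "the" because their vectors point in similar directions. Every row is computed independently, which is what allows all tokens to be evaluated at the same time.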

Kinds Of Open-Source Large Language Model Projects Available

Because of their great versatility, LLMs can be used for a variety of NLP tasks. These include content translation, in which LLMs with multilingual training translate text from one language to another; content summarization, in which LLMs condense lengthy passages or pages of text for quicker understanding; and content rewriting, in which LLMs rewrite sections of text in response to instructions, providing a quick and effective way to change content. Further useful applications of LLMs include:

Information retrieval

LLMs help improve the accuracy and relevance of search results provided by information retrieval systems. For example, each time a system built on a large language model such as OpenAI’s GPT is asked a question, it searches for pertinent material, summarizes it, and communicates it to you in a conversational manner. An LLM carries out information retrieval in this way in response to a prompt in the form of a question or an instruction.

Sentiment analysis

Like human beings, these AI models can decipher the mood or emotion behind words and determine the intent of a piece of text or a specific reaction. Because of this, major corporations can use LLMs to supplement or replace human agents in their customer support departments.

Text production

ChatGPT, which is built on a large language model, is one excellent example of generative AI that allows users to create new text from inputs. These models generate text in response to prompts. For example, you may give them an assignment like “Write a tagline for the newly opened supermarket” or “Compose a short coming-of-age story in the style of Louisa May Alcott.”

Code Creation

LLMs are remarkably good at recognizing patterns, which helps them write useful code. They are a great aid in programming because they can interpret coding requirements and help developers build code snippets for various software applications.

Conversational AI and chatbots

Virtual assistants, or chatbots, are conversational AI models. They can comprehend the tone and context of a discussion and generate empathetic, natural-sounding responses that read much like those of a human agent. Chatbots, which come in a variety of shapes and sizes, are a popular way to use conversational AI to interact with users through a query-and-response mechanism. The most popular LLM-based AI chatbot is OpenAI’s ChatGPT, which was launched on the GPT-3.5 model. Customers can now also take advantage of the newer GPT-4 model for improved performance.

Classification and Categorization

LLMs can also be applied to conventional ML use cases that involve categorization. Zero-shot and few-shot learning make use of LLMs’ in-context learning capabilities: the model is given no labeled examples, or only a handful, directly in the prompt.
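The difference between zero-shot and few-shot classification comes down to what is placed in the prompt. The sketch below builds both kinds of prompt as plain strings. The `Text:`/`Label:` layout, the function name, and the example reviews are assumptions chosen for illustration, and the resulting string would still need to be sent to whichever model you use.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a classification prompt from a task description,
    zero or more labeled examples, and the text to classify."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    lines.append(f"Text: {query}")
    lines.append("Label:")  # left open for the model to complete
    return "\n".join(lines)


task = "Classify the sentiment of each text as positive or negative."

# Hypothetical labeled examples for the few-shot case.
examples = [
    ("The checkout process was quick and easy.", "positive"),
    ("My order arrived damaged and late.", "negative"),
]

few_shot = build_few_shot_prompt(task, examples, "Support resolved my issue in minutes.")
zero_shot = build_few_shot_prompt(task, [], "Support resolved my issue in minutes.")

print(few_shot)
```

Passing an empty list of examples produces the zero-shot variant, where the model has only the task description to go on. No model weights change in either case, which is what distinguishes in-context learning from fine-tuning.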

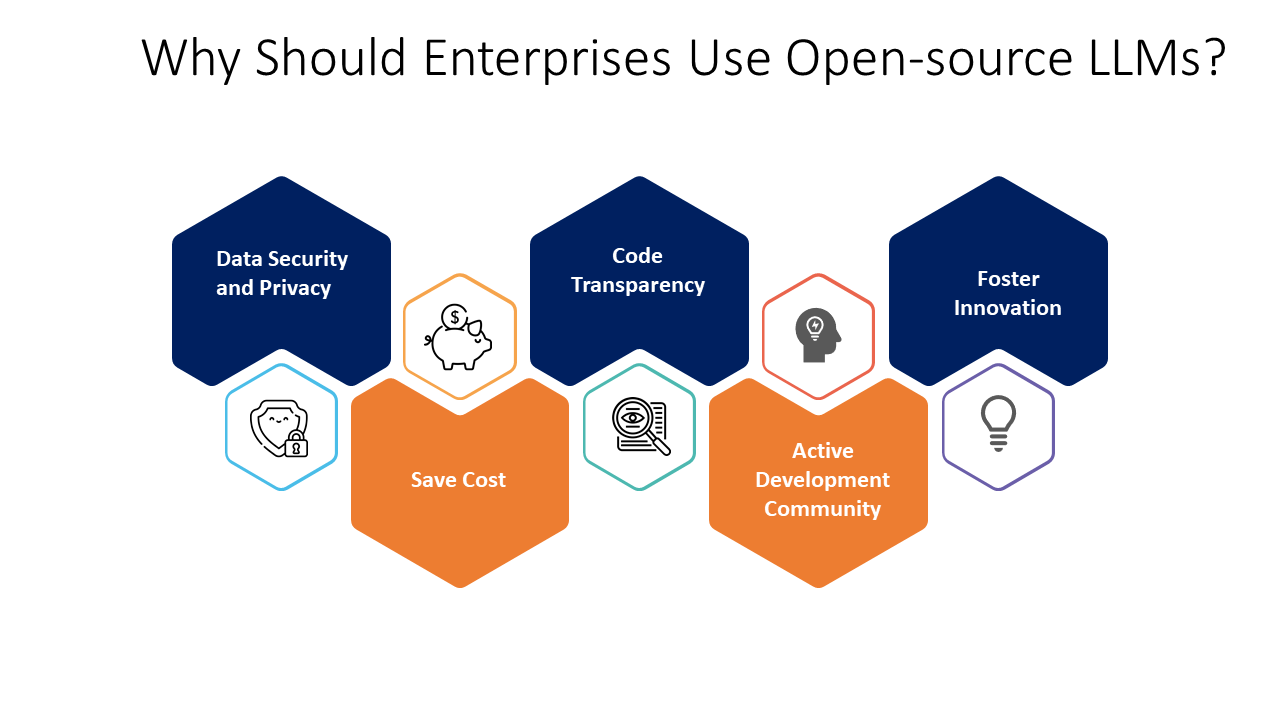

What are the advantages of Open Source LLMs?

Bigger LLMs were once thought to be better, but businesses are now learning that they can be unaffordable for research and innovation. Open-source large language models started to emerge in response, posing a threat to the proprietary LLM business model.

Openness and adaptability

Because open-source large language models offer transparency and flexibility, businesses can run them within their own infrastructure, be it on-premises or in the cloud, even without extensive in-house machine learning expertise. As a result, they have complete control over their data and can keep sensitive data on their own network, which decreases the likelihood of a data breach or unwanted access.

An open-source large language model also provides transparency about its operation, architecture, training materials, and methods. Code inspection and algorithm visibility give an organization greater credibility, support audits, and help demonstrate ethical and legal compliance. Furthermore, open-source large language models can be optimized to improve performance and decrease latency.

Savings on costs

Since there are no licensing fees associated with them, open-source LLMs are typically far less expensive over time than proprietary LLMs. On the other hand, the cost of operating an LLM includes cloud or on-premises infrastructure, which usually involves a large upfront setup cost.

New features and input from the community

Open-source large language models can be fine-tuned. Businesses can customize the LLM with features that suit their particular needs, and they can train it on their own datasets. Making such changes to a proprietary LLM requires working with the vendor, which costs both time and money.

With a proprietary LLM, an organization is forced to rely on a single supplier. With an open-source LLM, it can benefit from community contributions, work with various LLM development companies, and potentially hire AI developers to handle updates, development, maintenance, and support. Open source lets businesses experiment and draw on contributions from people with different viewpoints, which may lead to solutions that keep them on the cutting edge of technology. Businesses that use open-source large language models also gain more control over their technology and how they employ it.


Challenges to Open-Source Large Language Models

The following are a few dangers connected to large language models that are available as open-source:

False information and deception

Open-source large language models are becoming increasingly proficient at producing text that resembles human language. There are therefore worries that these models could be abused to disseminate propaganda, fake news, and false information. Individuals may abuse the models for personal benefit: fabricating false information, swaying public opinion, and casting doubt on the reliability of legitimate sources of information. As a result, there is a greater need than ever to think through ethical issues, abide by moral standards, and apply content moderation tools when using large open-source language models.

Security and Privacy Issues

Large open-source language models rely on massive volumes of training data, which raises data security and privacy issues. Sensitive data, such as bank or medical records and private correspondence, may be present in the training datasets, and the model’s outputs can expose this information, putting personal confidentiality at risk. Attackers can also use malware to exploit weaknesses in model implementation and deployment pipelines, jeopardizing system integrity and gaining access to private data.

Fairness and Bias

Despite numerous initiatives to reduce prejudice and advance equity in AI systems, large open-source language models may amplify pre-existing biases in their training data. Biases of all kinds, including those based on gender, color, ethnicity, and socioeconomic status, can appear in the model’s output and result in unjust treatment, discrimination, and even harm to society. To address bias in open-source LLMs, it is crucial to use bias detection and mitigation techniques along with a diverse training dataset. Furthermore, developers of open-source large language models need to encourage accountability and transparency in the model’s creation and assessment.

Problems with Intellectual Property

Open-source large language models are prone to complications with licensing contracts, intellectual property rights, and usage limitations. When open-source projects are combined with proprietary methods or datasets, developers and organizations may be more exposed to legal disputes over ownership of the model architecture, training data, and derived works. It is crucial for developers and users of open-source large language models to adopt transparent licensing frameworks and collaborative agreements in order to settle intellectual property disputes and provide fair access to these technologies.

Future Implications of LLMs

Large language models (LLMs) like GPT-3, and chatbots like ChatGPT, produce natural language writing. They have garnered particular attention in recent years because their output deviates so little from human-written text. Artificial intelligence and LLMs have made significant advances together. However, there are worries about how these technologies may affect society, the labor market, and communication.

The potential for LLMs to upend labor markets is one of the main concerns. Over time, large language models will be able to take over labor-intensive tasks such as drafting legal documents, handling customer service chats, and writing news blogs. People whose jobs are susceptible to automation may lose them as a result.

It’s crucial to remember that LLMs cannot fully take the place of human labor. They are a tool that, through automation, can help people become more effective and productive at work. Even as tasks are automated, the productivity and efficiency that LLMs bring will also lead to the creation of new jobs. For instance, companies might be able to produce brand-new goods or services that were previously too costly or time-consuming to develop. By using LLMs, they can increase productivity and innovate through process optimization and efficiency gains.

LLMs have the capacity to influence society in a number of ways. For instance, applying LLMs to create individualized education or healthcare plans could lead to better student and patient outcomes. Because LLMs are capable of producing insights from extensive data analysis, they can help governments and corporations make better decisions.

Examples of businesses using LLMs that are open-source

Here are some examples of how businesses around the world have begun to use open-source language models.

VMware

VMware, a well-known company in the virtualization and cloud computing space, has deployed Hugging Face’s StarCoder, an open-source large language model. VMware wants to increase its developers’ productivity by using this model to help them generate code.

This deliberate choice shows how important internal code security is to VMware, which wants to host the model on its own servers rather than use an outside system like GitHub’s Copilot (GitHub is owned by Microsoft). This could be because of the sensitivity of its codebase and a wish to keep it out of Microsoft’s hands.

Brave

In an effort to set itself apart from competitors, Brave, a web browser company that prioritizes security, has adopted an open-source large language model, Mixtral 8x7B from Mistral AI, for its conversational assistant, Leo.

Leo formerly used the Llama 2 model, but Brave changed the assistant to use Mixtral 8x7B by default. This move demonstrates the organization’s dedication to incorporating open LLM technology to protect user privacy and improve browser functionality.

Gab Wireless

Gab Wireless, a business that specializes in kid-friendly mobile phone services, uses open-source large language models from Hugging Face to strengthen its messaging system. The goal is to filter the messages that kids send and receive to make sure that their conversations don’t contain any offensive material. Gab Wireless relies on open language models to help keep children safe in their interactions, especially with strangers.

IBM

IBM actively uses open source large language models in a number of its operational domains.

Firstly, the AskHR software efficiently answers HR queries by using open language models and IBM’s Watson Orchestration.

Secondly, its Consulting Advantage tool features an open-source large language model library and a “Library of Assistants” to assist consultants, powered by IBM’s Watson platform.

Thirdly, their marketing initiatives use an LLM-driven program that is connected with Adobe Firefly to create creative images and content for marketing purposes.

Intuit

Intuit, the business behind TurboTax, QuickBooks, and Mailchimp, has developed language models that incorporate open LLMs. These models are essential parts of Intuit Assist, a tool that helps users with a variety of tasks, analysis, and customer support. The company builds these large language models on open-source frameworks enhanced with Intuit’s proprietary data.

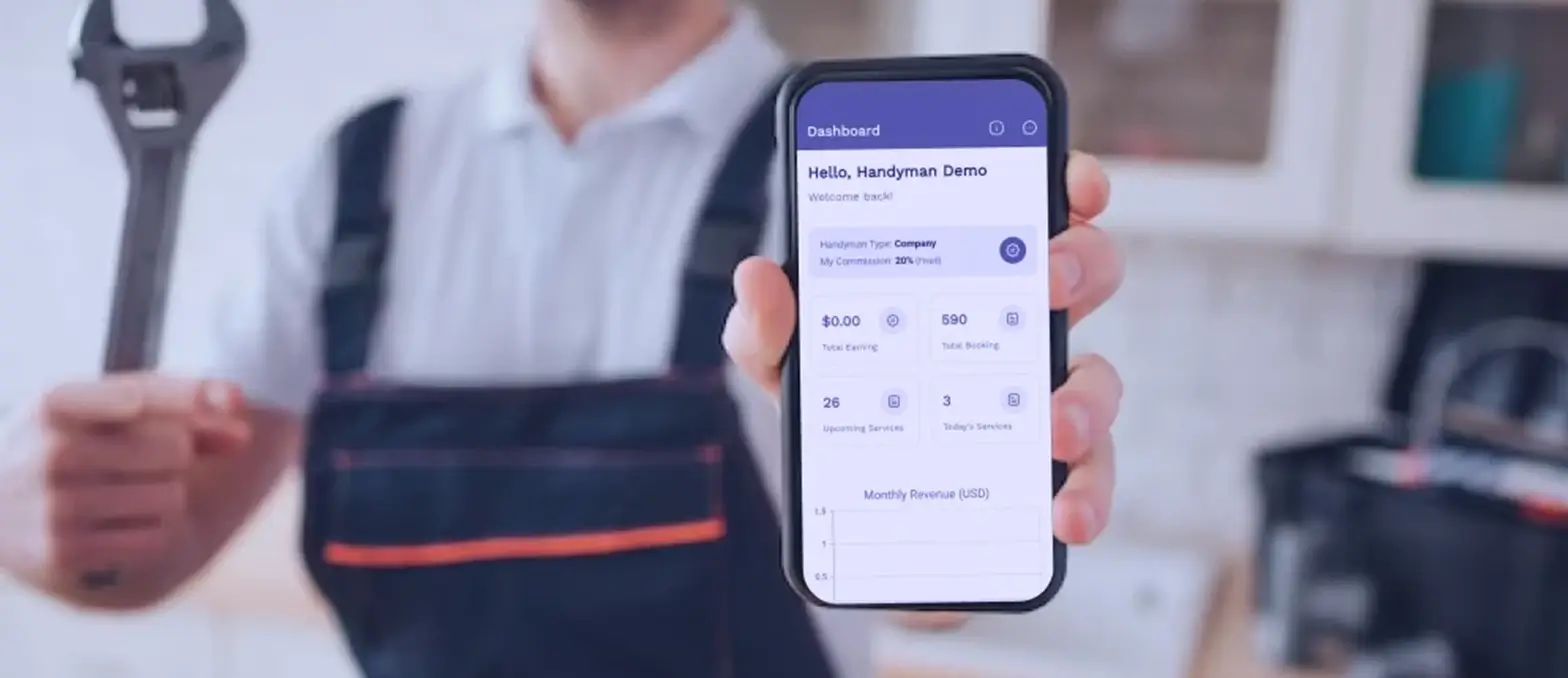

Shopify

Shopify Sidekick, an AI-powered application that makes use of Llama 2, is one example of how Shopify has used publicly available large language models. The program helps small business owners automate tasks associated with managing the websites of their online stores. It helps merchants save time and streamline their operations by producing marketing copy, product descriptions, and customer service responses.

LyRise

LyRise, a talent-matching firm based in the United States, uses open-source large language models in its chatbot, which is based on Llama and functions much like a human recruiter. The chatbot draws from a pool of high-quality profiles in Africa across numerous industries and helps companies find and hire top AI and data talent.

Niantic

Niantic, the company behind Pokémon Go, uses open-source large language models in Peridot, one of its more recent games. Peridot uses Llama 2 to create environment-specific reactions and animations for the pet characters, making character interactions more dynamic and context-aware.

Perplexity

This is how Perplexity, a generative AI company, uses open-source LLMs.

Process of creating a response:

Perplexity’s engine goes through about six steps to produce a response when a user asks a question. Several large language models are used in this process, which demonstrates the company’s dedication to providing thorough and precise responses.

At the very last step of response preparation, a critical one, Perplexity uses its own custom-built open-source large language models. These models are designed to provide a concise summary of content that is pertinent to the user’s query, and they build on existing models such as Mistral and Llama.

Also, AWS Bedrock is used for the fine-tuning of these models, with an emphasis on the use of open models for increased control and customization. This tactic highlights Perplexity’s commitment to improving their technology in order to achieve better results.

Partnership and API integration.

Additionally, in an effort to broaden its technical reach, Perplexity has partnered with Rabbit to integrate Perplexity’s open-source large language models into the R1, Rabbit’s small artificial intelligence device. This partnership, made possible by an API, expands the use of Perplexity’s cutting-edge models and represents a major advancement in the application of AI in real-world settings.

CyberAgent

CyberAgent, a Japanese digital advertising company, uses open-source large language models to enhance its AI-driven advertising services, such as Kiwami Prediction AI, through its OpenCALM initiative, a configurable Japanese language model. By taking an open-source approach, CyberAgent hopes to promote joint AI development, gain outside perspectives, and accelerate AI progress in Japan. Additionally, a collaboration with Dell Technologies has improved its GPU and server capabilities, resulting in a 5.14x increase in model performance. This streamlines service upgrades and improvements for increased effectiveness and economy.


Conclusion

Natural language processing has seen a revolution thanks to large language models (LLMs), which have made it possible to reach new heights in text generation and comprehension. Trained on big data, LLMs learn to understand context and entities and to respond to user inquiries. They are therefore well suited to routine use in a variety of jobs across numerous sectors. Nonetheless, there are concerns about these models’ possible biases and ethical ramifications. It’s vital for AI solution providers to examine LLMs critically and consider how they affect society. LLMs have the potential to improve many areas of life, but we must remain conscious of their limitations and ethical ramifications.

FAQs

Which large language models are the best?

Well-known large language models include RoBERTa, GPT-3, BERT, T5, and GPT-2. These models can translate languages, summarize texts, and respond to questions. They can also generate very realistic and coherent text for a variety of natural language processing applications.

What are the uses of LLMs?

LLMs have the potential to transform numerous sectors. Their uses include:

- producing writing that resembles that of a human,

- handling a variety of natural language processing jobs,

- aiding content production and boosting search engine rankings,

- increasing the accuracy of language translation, and

- improving the functionality of virtual assistants.

Large language models are also useful for scientific studies, like the analysis of massive amounts of textual data in sociological, medical, and linguistic domains.

How do NLP and LLMs differ from one another?

A branch of artificial intelligence called natural language processing, or NLP, focuses on deciphering and interpreting human language. In contrast, large-scale, text-generating LLMs are specialized models employed in NLP that perform exceptionally well in language-related tasks.

What are LLMs in AI?

LLMs in artificial intelligence are large language models created with NLP and deep learning techniques. They are able to comprehend and produce content that is similar to that written by a human.