ChatGPT has become a household name in just over a year. The algorithms behind this popular AI tool also power a wide range of other apps and services. To understand how ChatGPT works, it helps to look at the language engine underneath it. GPT stands for Generative Pre-trained Transformer, and the number indicates the version of the model. Most AI text generators in use today rely on GPT-3, GPT-3.5, or GPT-4, though they rarely advertise which version they use. This article explains what ChatGPT is and how it works, and answers common questions about ChatGPT prompt engineering.

ChatGPT has propelled GPT into the limelight by providing a user-friendly and, above all, free platform for interacting with an AI text generator. It gave birth to a new realm, “prompt engineering services”. The chatbot is also quite popular, just like SmarterChild was. Currently, GPT-3.5 and GPT-4 are the most widely used large language models (LLMs). However, it is expected that there will be a significant increase in competition in the upcoming years.

For instance, Google has developed Bard (now Gemini), an AI chatbot powered by its own language engine called Pathways Language Model (PaLM 2). The latest LLM, called Llama 2, has been released by Meta, the parent company of Facebook. Additionally, there are options available for corporate entities, such as Writer’s Palmyra LLMs and Anthropic’s Claude. Currently, OpenAI’s product is widely regarded as the industry standard. It is a straightforward tool that anyone can easily access.



What is ChatGPT?

ChatGPT is an app developed by OpenAI. The GPT language models behind it can answer questions, compose text, draft emails, hold conversations, explain code in different programming languages, translate natural language into code, and more. How well they perform these tasks depends on the natural-language prompts you provide.

Creating a Shakespearean sonnet about your cat or brainstorming subject lines for your marketing emails can be enjoyable, but ChatGPT also benefits OpenAI. It lets the company gather a large amount of data from real users while serving as an impressive showcase of GPT’s capabilities, which might otherwise seem abstract to anyone without a deep understanding of machine learning. This data collection led to ChatGPT being restricted in Italy in early 2023, although the concerns raised by Italian regulators have since been resolved.

Currently, ChatGPT gives users access to two GPT models. GPT-3.5, the default option, is free for everyone but slightly less powerful. Only ChatGPT Plus subscribers have access to the more advanced GPT-4, and even they are limited in how many messages they can send. The cap is currently 25 messages every three hours, although this may change in the future.

One of the standout features of ChatGPT is its ability to retain the ongoing conversation you’re having with it. This feature allows the model to understand the context of previous questions you have asked and utilize that information to have more meaningful conversations with you. Additionally, you have the option to request reworks and revisions, which will be based on the previous discussion. The AI’s interaction feels more like a genuine conversation.
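One common way for chat applications to retain context is to keep the running transcript and send it back to the model with every new message. The sketch below illustrates that idea only. The `Conversation` class, the role labels, and the `max_messages` cutoff are assumptions made for illustration, not a description of how ChatGPT is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps the running transcript that is resent to the model on every turn."""

    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        # The role labels ("user", "assistant") are illustrative.
        self.messages.append({"role": role, "content": content})

    def as_model_input(self, max_messages: int = 20) -> list[dict[str, str]]:
        # Keep only the most recent messages: a crude stand-in for a context limit.
        return self.messages[-max_messages:]


chat = Conversation()
chat.add("user", "Suggest a subject line for our spring sale email.")
chat.add("assistant", "Spring into savings: 20% off this week")
chat.add("user", "Make it shorter.")  # "it" only makes sense given the earlier turns

model_input = chat.as_model_input()
print(len(model_input))  # 3
```

Because the earlier turns travel with the latest message, a follow-up such as “Make it shorter” can be interpreted correctly.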

How does it work?

ChatGPT works by analyzing your input and generating the response it predicts will be most suitable, drawing on what it learned during training. That statement sounds simple, but it hides a good deal of complexity behind the scenes. ChatGPT relies on several key AI concepts, which are described in detail below.

- Pre-training the Model: ChatGPT begins with a pre-training phase in which the model is trained on a massive dataset that includes large portions of the Internet. During this phase, the model learns the patterns, structures, and relationships within the data.

- Architecture: ChatGPT uses a transformer architecture, which is known for its attention mechanisms. These allow the model to capture long-range dependencies and relationships in the data.

- Tokenization: The input text is split into smaller units called tokens, typically words or subwords. Each token is assigned a numerical ID, creating a sequence of numbers that the model can process.

- Positional Encoding: Positional encoding is added to provide information about the position of each token in the sequence. This helps the model understand the order of words in a sentence.

- Model Layers: The model consists of multiple layers of self-attention mechanisms. Each layer refines the understanding of the input by considering each token in the context of the others.

- Training Objectives: During pre-training, the model is trained to predict the next word in a sequence based on the context of the preceding words. This unsupervised learning process helps the model learn grammar, semantics, and world knowledge.

- Parameter Fine-Tuning: After pre-training, the model is fine-tuned on specific tasks or domains using supervised learning. This involves training the model on labeled datasets for tasks like language translation, summarization, or question answering.

- Prompt Handling: In ChatGPT, users provide prompts, or input messages, to generate responses. The model processes these prompts and generates output sequences based on its learned patterns and the context. Many people now list GPT-3 prompt engineering as a skill on their resumes.

- Sampling and Output Generation: The model generates responses using sampling techniques. One common method is temperature-based sampling, where higher temperatures make the output more random and lower temperatures make it more deterministic.

- Post-processing: The generated output is post-processed to convert numerical tokens back into human-readable text. This step ensures that the final output is coherent and formatted correctly.

- User Interaction Loop: The process is iterative: users provide feedback, and the model refines its responses within the conversation. Fine-tuning on user feedback over time is also crucial for improving the model’s ability to generate contextually relevant and coherent responses.

- Deployment: Once trained and fine-tuned, ChatGPT can be deployed for various applications, such as chatbots and content generation, where it can engage in conversations and provide useful outputs.
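Several of the steps above (tokenization, positional encoding, temperature-based sampling, and post-processing) can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the six-word vocabulary, the whitespace tokenizer, and the made-up scores are hypothetical, and real models use subword tokenizers and scores produced by the network. The sinusoidal encoding shown is the scheme from the original Transformer paper; GPT-style models often learn their position embeddings instead.

```python
import math
import random

# Toy vocabulary (hypothetical); real models use tens of thousands of subword tokens.
VOCAB = ["the", "cat", "sat", "on", "mat", "<unk>"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> list[int]:
    """Whitespace tokenization, a simplification of subword tokenization."""
    unk = TOKEN_TO_ID["<unk>"]
    return [TOKEN_TO_ID.get(word, unk) for word in text.lower().split()]


def detokenize(ids: list[int]) -> str:
    """Post-processing: map token IDs back to human-readable text."""
    return " ".join(VOCAB[i] for i in ids)


def positional_encoding(position: int, dim: int = 4) -> list[float]:
    """Sinusoidal position vector: alternating sine and cosine values."""
    enc = []
    for i in range(0, dim, 2):
        angle = position / (10000 ** (i / dim))
        enc.extend([math.sin(angle), math.cos(angle)])
    return enc


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Draw one token ID at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


ids = tokenize("The cat sat on the mat")
print(ids)              # [0, 1, 2, 3, 0, 4]
print(detokenize(ids))  # the cat sat on the mat

fake_logits = [2.0, 1.0, 0.5, 0.1, 0.1, -1.0]  # made-up scores, one per vocabulary entry
cold = softmax_with_temperature(fake_logits, temperature=0.2)
hot = softmax_with_temperature(fake_logits, temperature=2.0)
print(max(cold) > max(hot))  # True: low temperature concentrates probability

next_id = sample_next_token(fake_logits, temperature=1.0, rng=random.Random(0))
print(VOCAB[next_id])
```

At a low temperature almost all of the probability lands on the top-scoring token, which is why the output becomes more deterministic. At a high temperature the probabilities flatten and less likely tokens are picked more often.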

What is a prompt?

In ChatGPT, a prompt refers to an input provided to it to generate a response or output. Users can interact with and give instructions to ChatGPT using this feature. For example, when using GPT-3, you would input a prompt in the form of a text string or a set of instructions. The model then generates a response or completion based on that prompt.

The specific prompt may differ based on the task at hand. For instance, when utilizing a language model for language translation, you would provide a sentence in one language that you desire to have translated into another language. When it comes to generating text, the prompt can take various forms such as the opening of a story, a question, or any other input that you wish the model to expand upon.

It is worth mentioning that the quality and relevance of the generated output often depend on how specific and clear the prompt is. Crafting well-thought-out prompts is essential to ensure that a generative AI model produces the desired outcomes. Researchers and AI prompt engineers practice prompt engineering to gain insights into the model’s responses and refine its behavior to suit specific applications.


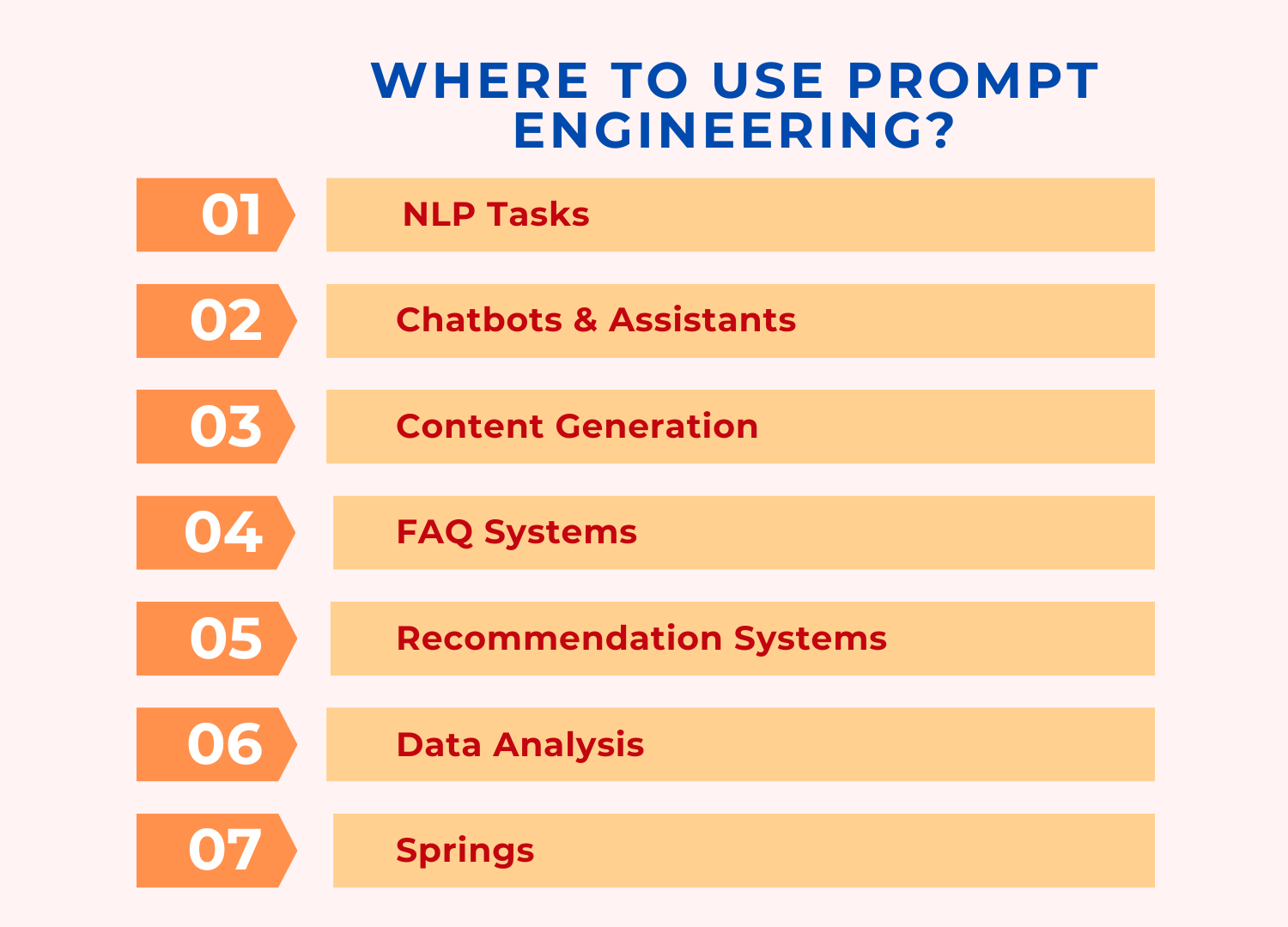

What is Prompt Engineering?

Imagine you have a smart robot that understands and responds to your commands. Now, think of a “prompt” as the way you talk to this robot, the instructions or questions you give it.

Prompt engineering is like figuring out the best way to talk to this robot to get the answers or actions you want. It involves experimenting with different ways of asking or instructing so that the robot understands you better. In generative AI (like text generation models), a prompt is a piece of text you give to the model to get it to generate more text. Prompt engineering is about tweaking, refining, and choosing the right words in your prompt to get the AI model to produce the desired output. ChatGPT prompt engineering is not a difficult skill to learn.

For example, if you’re using an AI to write a poem, your prompt might be something like “Write a poem about a sunset.” But if the model isn’t quite getting the poetic vibe right, you might experiment with different prompts like “Compose a beautiful poem describing the colors of the sky as the sun sets.” This tweaking of prompts to get better results is prompt engineering.

In essence, prompt engineering is about being a bit like a language detective, figuring out the best way to phrase your request so the AI understands and responds in a way that makes sense or is most useful to you. It’s an important skill when working with generative AI systems to get the outcomes you’re looking for.
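The tweak-and-compare loop described above can be sketched as a small harness that runs several prompt variants and ranks the outputs. Everything here is a stand-in: `fake_generate` simply echoes the prompt instead of calling a real model, and `fake_score` is a hypothetical keyword check, whereas in practice a person usually reads and judges the outputs.

```python
from typing import Callable


def compare_prompts(
    variants: list[str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
) -> list[tuple[float, str, str]]:
    """Run each prompt variant and return (score, prompt, output), best first."""
    results = []
    for prompt in variants:
        output = generate(prompt)
        results.append((score(output), prompt, output))
    return sorted(results, key=lambda r: r[0], reverse=True)


def fake_generate(prompt: str) -> str:
    # Stand-in for a real model call (hypothetical): echoes the prompt in lowercase.
    return prompt.lower()


def fake_score(output: str) -> float:
    # Hypothetical scoring rule: count how many desired words appear in the output.
    return float(sum(1 for word in ("colors", "sky") if word in output))


variants = [
    "Write a poem about a sunset.",
    "Compose a beautiful poem describing the colors of the sky as the sun sets.",
]
ranked = compare_prompts(variants, fake_generate, fake_score)
print(ranked[0][1])  # the more specific prompt ranks first
```

Swapping `fake_generate` for a real model call and `fake_score` for a human rating turns the same loop into a simple record of which phrasing worked best.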

How to write an effective prompt?

Creating AI prompts calls for careful consideration so that the AI’s output aligns with the intended outcome. Whether a user works with ChatGPT, Google Bard, OpenAI’s DALL-E 2, or Stable Diffusion for text-to-text or text-to-image tasks, learning how to ask the right questions is essential to achieving the desired results.

Here are some helpful tips for crafting effective AI prompts, as told by professional AI prompt engineers:

- Identify the goal: Users should first determine the purpose of the prompt and the outcome they are aiming for. For instance, the goal might be a blog post of fewer than 1,000 words, or an AI-generated image of a cat with vibrant green eyes and a luxuriously thick coat of fur.

- Be specific and provide relevant context: When creating an AI prompt, give clear instructions that are specific and focused. Instead of using vague language, include precise details about the traits you want the AI model to generate, such as features, shapes, colors, textures, patterns, or aesthetic styles. Relevant background and context also help the model understand your requirements and produce more accurate results. For example, instead of simply asking it to “Create landscape,” request a serene landscape featuring a snow-capped mountain in the background, a calm lake in the foreground, and a setting sun casting warm hues across the sky.

- Include keywords or phrases: Including keywords and phrases helps when the output is meant for search engine optimization, and it tells the model which terms you prefer.

- Keep prompts concise: The right length for a prompt varies with the AI platform being used. Longer, more intricate prompts give the AI more cues to work with, but unnecessary detail dilutes the request. For image prompts, three to seven words is often suggested as the optimal length; in every case, keep what is relevant and cut the rest.

- Avoid conflicting terms: To prevent confusing the AI model, steer clear of terms that contradict each other. For instance, using both “abstract” and “realistic” in the same prompt could confuse the AI generator and result in undesired output.

- Ask open-ended questions: Open-ended questions typically generate more extensive responses than questions that can be answered with a simple “yes” or “no.” Instead of asking, “Is coffee detrimental to your health?”, ask, “What are the potential advantages and disadvantages of drinking coffee for one’s health?”

- Use AI tools: There are various platforms and AI tools for generating prompts and creating high-quality AI-generated content. Users can write and customize prompts on services such as ChatGPT, DALL-E, and Midjourney.
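Several of the tips above (state the goal, add context, include keywords, avoid conflicting terms) can be applied mechanically with a small helper that assembles a prompt from its parts. This is a minimal sketch, not a standard tool: the function names, the labels such as `Context:`, and the single entry in `CONFLICTING_TERMS` are assumptions chosen for illustration.

```python
from collections.abc import Sequence

# Hypothetical list of term pairs that contradict each other (taken from the tip above).
CONFLICTING_TERMS = [("abstract", "realistic")]


def build_prompt(
    goal: str,
    context: str = "",
    keywords: Sequence[str] = (),
    constraints: Sequence[str] = (),
) -> str:
    """Assemble a prompt: the goal first, then optional context, keywords, constraints."""
    parts = [goal.strip()]
    if context:
        parts.append(f"Context: {context.strip()}")
    if keywords:
        parts.append("Include these terms: " + ", ".join(keywords))
    if constraints:
        parts.append("Constraints: " + "; ".join(constraints))
    return "\n".join(parts)


def find_conflicts(prompt: str) -> list[tuple[str, str]]:
    """Return every known pair of conflicting terms that both appear in the prompt."""
    lowered = prompt.lower()
    return [pair for pair in CONFLICTING_TERMS if all(term in lowered for term in pair)]


prompt = build_prompt(
    goal="Write a blog post about home coffee brewing.",
    context="Readers are beginners.",
    keywords=["pour-over", "grind size"],
    constraints=["under 1,000 words"],
)
print(prompt)
print(find_conflicts("An abstract, realistic landscape"))  # [('abstract', 'realistic')]
```

Keeping the goal, context, and constraints as separate arguments makes it easy to change one element at a time and see how the output responds.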


Why is Prompt Engineering so crucial in today’s scenario?

Many job descriptions do not list a specific title for prompt engineering, particularly when it comes to working with generative AI models. However, prompt engineering skills are extremely valuable in many positions across the wider field of artificial intelligence and machine learning. Here is why strong prompt engineering skills can be so important for a successful career in AI.

- AI Research and Development: Researchers and developers working on AI projects often need to fine-tune and optimize models for specific tasks. Skills in prompt engineering are essential for tailoring pre-trained models to achieve desired outcomes in areas like natural language processing, image generation, or code generation.

- Data Science and Analytics: In data science roles, professionals may leverage generative AI tools for various tasks, such as data synthesis, text generation, or predictive modeling. Effective prompt engineering can enhance the performance and relevance of these models for specific business objectives.

- Conversational AI and Chatbot Development: With the increasing use of chatbots and conversational AI, individuals skilled in prompt engineering are valuable. Crafting prompts that lead to coherent and contextually appropriate responses is critical in developing user-friendly and effective conversational agents.

- Content Generation and Marketing: In content creation and marketing, AI is often employed for generating written content, ad copies, or social media posts. Professionals in these fields benefit from prompt engineering skills to ensure that the AI-generated content aligns with brand voice and objectives.

- AI Consulting and Solutions Architecture: Professionals involved in AI consulting or solutions architecture may need to advise clients on the implementation of generative AI models. Understanding how to formulate effective prompts is crucial for aligning AI solutions with client needs.

- Ethical AI and Bias Mitigation: As concerns about bias and ethical considerations in AI grow, there is an increasing demand for professionals who can guide and influence the behavior of AI models through prompt engineering. This is particularly relevant for roles which focus on AI ethics and fairness.

- Startups and Innovation Hubs: In startup environments and innovation hubs, where novel AI applications are developed, individuals with expertise in prompt engineering can contribute significantly to the design and optimization of AI-powered products and services.

- Continuous Learning and Adaptation: The field of AI is dynamic, with models constantly evolving. Professionals who can quickly adapt to changes in model behavior and effectively engineer prompts for new versions of models are valuable assets to their teams.

How to become a ChatGPT prompt engineer?

Becoming a prompt engineer for models like ChatGPT involves a combination of natural language understanding skills and experimentation, along with a good understanding of the specific model you’re working with. Here’s a step-by-step guide on how to become a ChatGPT prompt engineer:

- Understand the Model: Familiarize yourself with the ChatGPT model, its capabilities, and limitations. Understand the types of tasks it excels at and those where it may struggle. This can involve reading model documentation and relevant research papers.

- Learn Natural Language Processing (NLP) Basics: Develop a foundational understanding of natural language processing, including concepts such as tokenization, language modeling, and neural networks. Online courses, tutorials, and textbooks on NLP can be valuable resources.

- Practice with ChatGPT: Spend time experimenting with ChatGPT. Use various prompts to understand how the model responds to different inputs. This hands-on experience will help you grasp the nuances of interacting with the model.

- Experiment with Prompts: Practice prompt engineering by experimenting with different prompts and observing the model’s responses. Understand how changes in wording, context, or specificity impact the output. This iterative process is crucial for refining your prompt engineering skills.

- Explore Use Cases: Explore a variety of use cases for ChatGPT, such as content creation, answering questions, or generating code snippets. Understanding the diverse applications will help you tailor prompts for specific tasks.

- Stay Updated: Stay informed about updates and improvements to ChatGPT or similar models. Models evolve, and being aware of changes will help you adapt your prompt engineering strategies accordingly.

- Develop Domain Expertise: If your tasks involve specific domains, such as technology, finance, or healthcare, develop domain expertise. This will enable you to craft prompts that align with the language and requirements of those domains.

- Understand Ethical Considerations: Be mindful of ethical considerations in AI, especially bias and fairness. As a prompt engineer, it’s important to consider the potential impact of your prompts on the generated content.

- Explore Tools and Frameworks: Familiarize yourself with tools and frameworks that assist in prompt engineering. Some platforms offer templates or libraries to streamline the process. Understand how to leverage these tools effectively.

- Build a Portfolio: Create a portfolio showcasing your prompt engineering projects. This could include before-and-after examples demonstrating how well-crafted prompts lead to desired outcomes. Share your portfolio on platforms like GitHub or personal websites.

- Networking and Collaboration: Engage with the AI and NLP communities. Attend conferences, participate in online forums, and collaborate with others working in similar domains. Networking can provide valuable insights and opportunities for collaboration.

- Continuous Learning: The field of AI is dynamic, with new models and techniques emerging regularly. Stay curious and committed to continuous learning. Follow the latest research papers, attend webinars, and enroll in relevant courses to stay updated.

Conclusion

To wrap up, let’s recap what we have covered. If prompt engineering had to be described in a single phrase, there is none better than “Communication is the key.” We discussed what ChatGPT is and the pillars it stands on. Remember, it’s not just about instructing a machine; it’s about crafting conversations that lead to meaningful and desired outcomes. Through hands-on experimentation, continuous learning, and a dash of creativity, you can become proficient in the art of prompting ChatGPT. With the growing popularity of generative AI solutions, prompt engineering has emerged as a career in its own right.


FAQs

Q1: What exactly is ChatGPT prompt engineering?

Prompt engineering is the art of crafting input messages or queries, known as prompts, to effectively communicate with ChatGPT. It involves experimenting with different prompts to guide the model and achieve desired responses or outputs.

Q2: Why is prompt engineering important for ChatGPT?

Effective prompt engineering is crucial because it directly influences the quality of ChatGPT’s responses. Crafting well-thought-out prompts ensures that the model understands your intentions, leading to more accurate and contextually relevant outputs.

Q3: Do I need a background in AI to master ChatGPT prompt engineering?

While a background in AI can be helpful, it’s not mandatory. Anyone with an interest in language and communication can learn and master prompt engineering through hands-on practice, experimentation, and continuous learning.

Q4: How can I showcase my prompt engineering skills?

Building a portfolio is a great way to showcase your prompt engineering skills. Share examples of before-and-after prompts, demonstrating how your thoughtful inputs lead to improved and desirable model outputs. Platforms like GitHub or personal blogs are excellent for displaying your work and expertise in prompt engineering.