
What is an AI Model?

An AI model is a program or system trained to carry out tasks that normally call for human intelligence. Such tasks include recognizing objects in images, understanding human language, making decisions based on information, and forecasting future events. The model learns patterns from data and uses what it has learned to produce results.

The learning process is driven by algorithms, which adjust the model’s internal parameters to improve its performance. Once sufficiently trained, the model can apply what it has learned to entirely new data.

> Importance of AI Models in the Real World

AI models are no longer just experimental tools. They actively shape how industries operate, how decisions are made, and how services are delivered. Their real-world impact spans a wide range of sectors and is growing quickly. Here’s how they’re making a difference:

- Automates Repetitive Tasks: AI models help automate routine processes such as data entry, sorting emails, or managing schedules, reducing human workload.

- Improves Decision Making: AI can go through a lot of information quickly and provide insights, helping businesses make better decisions.

- Enhances Customer Experience: AI models serve as the foundation for chatbots, recommendation algorithms, and voice assistants, offering personalized responses.

- Enables Predictive Analysis: Industries use AI models to predict outcomes, such as equipment failure, customer churn, or future sales trends.

- Supports Diagnosis: AI can analyze medical scans, spot illnesses early, and recommend treatment plans to improve patient care.

- Boosts Efficiency in Manufacturing: AI can streamline production by spotting product defects and cutting downtime through smart maintenance.

- Strengthens Financial Services: AI models can also identify suspicious or fraudulent transactions, calculate credit risk, and create smart trading strategies.

- Improves Supply Chain Management: AI can forecast demand, optimize inventory, and find efficient delivery routes.

- Helps in Natural Disaster Management: AI models process satellite data to help forecast floods and wildfires and to assess earthquake risk, enabling quicker response.

- Assists in Education: Personalized learning platforms use AI to adapt content based on student performance and learning speed.

- Empowers Smart Cities: AI controls traffic systems, energy usage, and waste management for improved urban living.

- Supports Environmental Monitoring: AI analyzes climate data, tracks pollution levels, and helps in wildlife conservation.

- Drives Innovation: From autonomous vehicles to language translation, AI models sit at the core of modern technological advancement.

Types of AI Models

AI models can be grouped by how they learn and by the kind of task they perform. Understanding these types is crucial when deciding which one best suits a given task.

> Based on the Learning Type

1. Supervised Learning

In supervised learning, the model is trained on labeled data. This means that each input in the training set comes with the correct output. The model learns the mapping from inputs to outputs by analyzing patterns in the data. Once trained, it can make predictions on new, unseen data.

- Use case: Email spam detection, in which emails are labeled “spam” or “not spam” and the model learns to classify new messages.

2. Unsupervised Learning

Unsupervised learning involves training a model on data without any labels. The goal is to discover hidden patterns in the data. It is especially useful when labeling large datasets is expensive or time-consuming.

- Use case: Customer segmentation in marketing, where the model groups customers with similar behavior without any predefined categories.

3. Semi-Supervised Learning

Semi-supervised learning combines supervised and unsupervised learning. A small portion of the training data is labeled, while the rest stays unlabeled. The model uses the labeled data to learn the structure of the dataset and applies that knowledge to label or make predictions on the unlabeled part.

- Use case: Medical image categorization. When doctors can label only some of the scans, the model uses the patterns it learned from the labeled scans to categorize the unlabeled ones.

4. Reinforcement Learning

In reinforcement learning, a model is trained by interacting with an environment. The model performs actions and receives feedback in the form of rewards or penalties. Over time, it learns the strategies that maximize rewards.

- Use case: Training AI bots to play complex games like chess, where the model refines its skills through trial and error.

> Based on the Task Type

1. Classification

Classification models are used when the goal is to assign inputs to predefined categories. These models output class labels, which makes them well suited to decision-making tasks.

Example: Deciding whether an incoming email is spam, or whether a tumour is malignant or benign.

2. Regression

Regression models predict continuous numerical values instead of categories. They work best when the outcome is a number.

Example: Analysing features such as the location, size, and age of a house to estimate its price.

3. Clustering

Clustering is used to group data points based on their similarities. In contrast to classification, clustering does not depend on labeled data. It is well suited to exploratory data analysis.

Example: Creating customer segments based on purchasing behavior or demographics.
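To make the idea of grouping by similarity concrete, here is a minimal sketch of k-means, one common clustering algorithm, written in plain Python. The customer figures (monthly orders, average basket value), the starting centroids, and the function names are made up for illustration; a real project would more likely rely on a library implementation.

```python
import math

def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        for p in points
    ]

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and moving centroids to cluster means."""
    for _ in range(iterations):
        labels = assign(points, centroids)
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep a centroid that attracted no points
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Hypothetical customers: (orders per month, average basket value)
customers = [(1, 20), (2, 25), (1, 22), (9, 80), (10, 85), (11, 90)]
centroids, labels = kmeans(customers, centroids=[(1, 20), (9, 80)])
print(labels)
```

No labels are supplied anywhere: the two groups emerge purely from how close the points are to each other.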

4. Anomaly Detection

These models detect data points or patterns that differ markedly from the rest of the dataset. Such deviations often point to underlying problems.

Example: Detecting fraudulent credit card transactions or identifying system failures.
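As a rough illustration, one of the simplest ways to flag unusual values is a z-score check: measure how many standard deviations each value sits from the mean. The transaction amounts, the threshold of 2.0, and the function name below are assumptions chosen for the sketch; production fraud systems use far richer models.

```python
import statistics

def find_anomalies(values, threshold=2.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical card transaction amounts
amounts = [12.5, 9.9, 14.2, 11.0, 13.3, 10.8, 950.0, 12.1]
suspicious = find_anomalies(amounts)
print(suspicious)
```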

5. Recommendation Systems

Recommendation models analyze user behavior, such as shopping or viewing patterns, and suggest relevant products or services. They help users decide what to buy or watch next.

Example: Movie recommendations on streaming platforms based on viewing history.

Difference Between AI, ML, and Deep Learning Models

| AI Model | ML Model | Deep Learning Model |
| --- | --- | --- |
| AI (Artificial Intelligence) is the broadest concept that enables machines to mimic human intelligence. | ML (Machine Learning) is a subset of AI focused on algorithms that learn from data. | Deep Learning is a subset of ML that uses neural networks with many layers to learn from large amounts of data. |
| Can include rule-based systems, logic, decision trees, etc. | Uses statistical methods to improve over time with experience. | Uses artificial neural networks to automatically extract complex patterns. |
| Works even without learning from data (e.g., expert systems). | Requires structured data to learn and make predictions. | Can learn from unstructured data like images, videos, and text. |
| Less data-dependent compared to ML and DL. | Needs a decent amount of data to perform well. | Requires large volumes of data and powerful hardware (GPUs). |
| Examples: Chatbots, game AI, smart assistants. | Examples: Spam filters, recommendation engines. | Examples: Facial recognition, self-driving cars, language translation. |
| Focuses on reasoning, problem-solving, and decision-making. | Focuses on data-driven prediction and classification. | Focuses on learning data representations with deep networks. |
| May include ML and DL models as components. | Is a component of AI, more focused and data-driven. | A more complex and resource-intensive version of ML. |

Key Statistics About AI Models

No discussion of AI model development is complete without its rapid growth across industries. Here are some key statistics that underline its importance:

- By some estimates, the global AI market is set to cross USD 300 billion by 2025.

- More than 60% of businesses have adopted at least one form of AI in their operations.

- Natural Language Processing (NLP) and Computer Vision are the most used AI domains, especially in healthcare, retail, and manufacturing.

- ChatGPT and Google Gemini (formerly Bard) are among the most popular AI language models used for text generation and assistance.

- TensorFlow, PyTorch, and Scikit-learn are the top three frameworks preferred by developers.

In terms of usage:

- 50% of developers prefer supervised learning methods.

- 30% use deep learning, especially in image and text-based projects.

- 20% apply reinforcement learning, especially in simulations and robotics.

These statistics show how widely AI models are already being used to improve business processes.

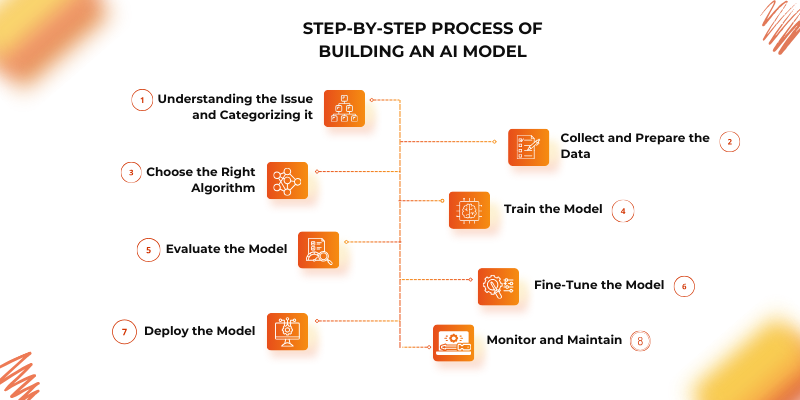

Step-by-Step Process of Building an AI Model

Building an AI model involves a long sequence of steps. Since the goal is to solve real-world problems, every step must be followed for the model to work well. So, if you’re wondering how to build AI models, let’s walk through the process from start to finish.

1. Understand the Problem and Categorize It

Before anything else, understand exactly what you want the AI model to do for you or your business. Skipping this step leads to a misguided project that is likely to fail, which is why it comes first.

Ask yourself the following:

- Are you trying to predict a category (e.g., spam vs. non-spam)? → This is a classification problem.

- Are you trying to predict a number (e.g., housing price)? → This is a regression problem.

- Do you want to group items without labels? → This could be clustering.

- Is your data coming in as text, images, or structured tables?

Also, identify:

- Whether the data is structured (like rows in a database) or unstructured (like images, videos, or raw text).

- What form the output should take: a class label, a number, or a list.

Stating the problem clearly helps you align the algorithms, data, and performance metrics with your goal.

2. Collect and Prepare the Data

Once you have defined the problem statement, data comes next. Data is the most fundamental part of any AI model. Its quality, quantity, and structure largely determine how the model will perform.

a. Data Collection

You can gather data from various sources, depending on the problem domain:

- APIs: Useful for accessing real-time or public datasets like stock prices or weather information.

- Web Scraping: For gathering data from websites (while respecting legal and ethical guidelines).

- Internal Databases: Company records, CRM systems, or other in-house databases.

- Sensors or IoT Devices: For real-time applications like predictive maintenance or automation.

- User-Generated Content: Reviews, feedback, or survey results.

Why does this step matter? A model can only learn from what it sees, so you need a dataset that is large enough and representative of the problem.

b. Data Cleaning

Raw data is often messy and unusable in its original form. Cleaning involves:

- Handling Missing Values: Fill them using mean/mode/median or remove rows/columns if needed.

- Removing Duplicates: Avoid training the model multiple times on the same data point.

- Correcting Inconsistencies: Ensure that data entries follow a consistent format (e.g., date formats, units of measurement).

Well-cleaned data ensures the model is not misled or confused during learning.
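The three cleaning steps above can be sketched in a few lines of plain Python. The records, field names, and the `clean` helper are hypothetical, and filling with the mean is just one of the options mentioned; in practice a library such as pandas is commonly used for this.

```python
from statistics import mean

# Hypothetical raw records with a missing value, a duplicate, and a stray date format
raw_rows = [
    {"customer_id": 1, "age": 34, "signup_date": "2024-01-15"},
    {"customer_id": 2, "age": None, "signup_date": "2024/02/03"},
    {"customer_id": 1, "age": 34, "signup_date": "2024-01-15"},  # exact duplicate
    {"customer_id": 3, "age": 46, "signup_date": "2024-03-09"},
]

def clean(rows):
    # 1. Remove exact duplicates while keeping the original order.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))

    # 2. Fill missing ages with the mean of the known ages.
    fill_value = mean(r["age"] for r in unique if r["age"] is not None)
    for r in unique:
        if r["age"] is None:
            r["age"] = fill_value
        # 3. Make date separators consistent (YYYY-MM-DD).
        r["signup_date"] = r["signup_date"].replace("/", "-")
    return unique

cleaned = clean(raw_rows)
for row in cleaned:
    print(row)
```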

c. Data Transformation

Before you can feed data into a model, you often need to transform it into the required format:

- Text to Tokens: Convert sentences into tokens for models to process (used in NLP).

- Normalization/Standardization: Scale numerical features so that no single feature dominates the training.

- Encoding Categorical Variables: Convert text labels into numbers using techniques like one-hot encoding or label encoding.

These transformations make the data machine-readable and consistent for the AI model development process.
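Here is a small sketch of each transformation in plain Python. The sample values and helper names are invented for illustration, whitespace splitting stands in for a real tokenizer, and the scaling helpers assume the input values are not all identical.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Normalization: rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Standardization: shift to mean 0 and scale to standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def one_hot(labels):
    """Encode each label as a 0/1 vector, one position per distinct category."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

# Hypothetical inputs
tokens = "AI models learn patterns from data".lower().split()
sizes_sqft = [800, 1200, 2000]
cities = ["Pune", "Delhi", "Pune"]

print(tokens)
print(min_max_scale(sizes_sqft))
print(standardize(sizes_sqft))
print(one_hot(cities))
```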

d. Data Splitting

To properly train and evaluate your model, split your dataset into three parts:

- Training Set (70–80%) – The data used by the model to learn patterns.

- Validation Set (10–15%) – Used to tune the model and prevent overfitting.

- Test Set (10–15%) – Used to evaluate final model performance on unseen data.

This ensures the model is not just memorizing but genuinely learning how to generalize.
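A three-way split might look like the sketch below. The 70/15/15 ratio is one choice within the ranges listed above, and the function name and fixed seed are assumptions made so the example is reproducible.

```python
import random

def split_dataset(rows, train=0.7, val=0.15, seed=42):
    """Shuffle a copy of the data, then cut it into train/validation/test parts."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains becomes the test set
    )

data = list(range(100))  # stand-in for 100 samples
train_set, val_set, test_set = split_dataset(data)
print(len(train_set), len(val_set), len(test_set))
```

Shuffling before splitting matters when the data is stored in some order, such as by date or by class; for time series, a chronological split is usually the safer choice.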

3. Choose the Right Algorithm

| Problem Type | Description | Common Algorithms |
| --- | --- | --- |
| Classification | Assign items to predefined categories or labels. | Logistic Regression, Decision Trees, Random Forest, SVM, KNN, Naive Bayes |
| Regression | Predict continuous numerical values. | Linear Regression, Ridge Regression, Lasso, SVR, Decision Tree Regressor |
| Clustering | Group similar data points without labeled outputs. | K-Means, DBSCAN, Hierarchical Clustering, Gaussian Mixture Models |
| Dimensionality Reduction | Reduce the number of input variables while retaining important information. | PCA (Principal Component Analysis), t-SNE, LDA |
| Anomaly Detection | Identify rare or unusual data points. | Isolation Forest, One-Class SVM, Autoencoders, Local Outlier Factor |
| Recommendation | Suggest items based on user behavior or preferences. | Collaborative Filtering, Matrix Factorization, Content-Based Filtering |
| Natural Language Processing (NLP) | Understand and generate human language. | RNN, LSTM, Transformers (BERT, GPT), Naive Bayes (for text classification) |
| Image Recognition | Analyze and classify images. | Convolutional Neural Networks (CNNs), ResNet, Inception |
| Time Series Forecasting | Predict future values based on past sequential data. | ARIMA, LSTM, Prophet, Exponential Smoothing |
| Optimization | Find the best solution among many possibilities. | Genetic Algorithms, Gradient Descent, Simulated Annealing |

4. Train the Model

The fourth step is training the model on the prepared data. This is where the model starts learning the relationships between inputs and outputs.

Training typically involves:

- Gradient Descent: An optimization algorithm used to minimize the loss (error) by adjusting weights gradually.

- Backpropagation: A technique used in training neural networks where the error is propagated backward to update weights.

- Epochs and Batches: The model learns in passes (epochs) over data, and often in small groups (batches) for efficiency.

- Cross-Validation: This is a technique to validate the model on different subsets of data to ensure it performs consistently.

During training, monitor metrics such as loss, accuracy, or mean error to confirm that the model keeps learning and improving.
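To see gradient descent, epochs, and batches working together, consider this minimal sketch that fits a straight line `y = w * x + b` by minimizing mean squared error. The toy data, learning rate, and function name are assumptions for illustration; deep learning frameworks compute the gradients automatically through backpropagation rather than by hand as done here.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000, batch_size=2):
    """Fit y = w * x + b with mini-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):                          # one epoch = one full pass over the data
        for start in range(0, len(xs), batch_size):  # process the data in small batches
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            n = len(batch_x)
            errors = [(w * x + b) - y for x, y in zip(batch_x, batch_y)]
            # Gradients of the batch's mean squared error with respect to w and b
            grad_w = 2 * sum(e * x for e, x in zip(errors, batch_x)) / n
            grad_b = 2 * sum(errors) / n
            # Step against the gradient to reduce the loss
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

The learned weight and bias should land very close to 2 and 1, the values used to generate the data. A learning rate that is too large would make the updates overshoot and diverge, which is why it is one of the first hyperparameters people tune.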

5. Evaluate the Model

After training, evaluate how well the model performs using the test data. The metrics you use depend on the type of problem:

For Classification Tasks:

- Accuracy: Percentage of correct predictions.

- Precision: Correct positive predictions among all predicted positives.

- Recall: How many actual positives the model identified correctly.

- F1-Score: Harmonic mean of precision and recall.

- Confusion Matrix: A summary table showing correct vs. incorrect predictions by class.
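These classification metrics all derive from four counts: true positives, false positives, false negatives, and true negatives. The sketch below computes them for a binary problem; the labels and predictions are invented, and the function name is only illustrative.

```python
def classification_report(y_true, y_pred, positive=1):
    """Compute binary classification metrics from true and predicted labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "confusion": {"tp": tp, "fp": fp, "fn": fn, "tn": tn},
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical spam labels (1 = spam) and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
report = classification_report(y_true, y_pred)
print(report)
```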

For Regression Tasks:

- RMSE (Root Mean Square Error): Square root of the average squared prediction error; it penalizes large errors more heavily than small ones.

- MAE (Mean Absolute Error): Average of absolute differences between predicted and actual values.

- R² Score: How well the model explains the variance in the target variable.
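The regression metrics can be computed directly from their definitions, as in this short sketch. The actual and predicted values are made up, and the function name is an assumption.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R²) for paired actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # unexplained variation
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variation in the target
    r2 = 1 - ss_res / ss_tot
    return mae, rmse, r2

# Hypothetical actual vs. predicted values
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 9.0]
mae, rmse, r2 = regression_metrics(y_true, y_pred)
print(mae, round(rmse, 4), round(r2, 4))
```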

Evaluation should always be based on the test set, not training or validation sets, to avoid biased or misleading results.

6. Fine-Tune the Model

The process doesn’t end with evaluation. If the evaluation results are not satisfactory, fine-tune the model for better performance. This can involve:

- Hyperparameter Tuning: Adjust settings like learning rate, depth of trees, number of neurons, batch size, etc.

- Changing Algorithms: Try different models that might be more suitable for the problem.

- Feature Engineering: Create new input features from existing ones to provide more useful information to the model.

- Ensemble Techniques: Combine multiple models (e.g., random forest, boosting) to improve accuracy.

Tuning can be manual or automated using:

- Grid Search: Try all combinations of parameters.

- Random Search: Try random combinations, which is often faster.
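The difference between the two search strategies is easy to see in code. In the sketch below, `validation_error` is a hypothetical stand-in for "train a model with these settings and score it on the validation set", and the parameter names and values are assumptions chosen for illustration.

```python
import itertools
import random

def validation_error(params):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    return (params["learning_rate"] - 0.1) ** 2 + 0.01 * (params["max_depth"] - 5) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [3, 5, 7]}

# Grid search: evaluate every combination of the listed values.
combos = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]
best_grid = min(combos, key=validation_error)

# Random search: evaluate only a random sample of the combinations.
sampled = random.Random(0).sample(combos, 4)
best_random = min(sampled, key=validation_error)

print("grid search:", best_grid)
print("random search:", best_random)
```

Grid search is guaranteed to find the best combination in the grid but its cost grows multiplicatively with every added parameter. Random search evaluates fewer candidates, so it is cheaper but may miss the best one.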

7. Deploy the Model

Once the model performs well, the next step is deployment: making it available for use in the real world.

Deployment methods include:

- Creating a REST API: Use tools like Flask or FastAPI to serve the model via a web interface.

- Cloud Services: Deploy the model on cloud platforms like AWS (SageMaker), Google Cloud (Vertex AI), or Microsoft Azure.

- Edge Devices: In applications like autonomous cars or smart cameras, models are deployed on local devices for low-latency responses.

During deployment:

- Ensure compatibility with existing systems.

- Package necessary dependencies using tools like Docker.

- Track model versioning for updates and rollback if needed.

8. Monitor and Maintain

Deployment is not the end of the process of AI model development. AI models need constant monitoring to stay effective over time. Models can lose accuracy if the data they receive in production changes from the training data—a problem known as data drift.

Key monitoring tasks include:

- Performance Tracking: Continuously monitor prediction accuracy and response times.

- Data Drift Detection: Identify changes in input patterns that could reduce model performance.

- Model Retraining: Update the model periodically with new data to keep it relevant.

- System Health Checks: Monitor for errors, bottlenecks, or latency issues.

Maintaining a model is essential for ensuring long-term value and trust in the AI system.
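As a rough illustration of data drift detection, the sketch below compares the mean of a feature in production against its mean in the training data, measured in training standard deviations. The feature values, the threshold of 2.0, and the function names are assumptions; dedicated monitoring tools apply more robust statistical tests across many features.

```python
from statistics import mean, stdev

def drift_score(training_values, production_values):
    """How many training standard deviations the production mean has moved."""
    shift = abs(mean(production_values) - mean(training_values))
    return shift / stdev(training_values)

def has_drifted(training_values, production_values, threshold=2.0):
    """Flag drift when the mean shift exceeds the chosen threshold."""
    return drift_score(training_values, production_values) > threshold

# Hypothetical values of a single input feature (customer age)
training_ages = [30, 32, 35, 31, 33, 34, 29, 36]
stable_batch = [31, 33, 34, 30, 35]
shifted_batch = [52, 55, 49, 58, 51]

print(has_drifted(training_ages, stable_batch))
print(has_drifted(training_ages, shifted_batch))
```

A drift alert like this is typically what triggers the retraining step listed above.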

Tools and Frameworks for Building AI Models

The AI development ecosystem is supported by a range of tools and frameworks that simplify model creation, training, and deployment. These AI model development tools help developers work efficiently while ensuring the models are robust and scalable.

> Programming Languages

- Python: The most popular language in AI development. It’s easy to learn, and supports a vast range of libraries for machine learning, deep learning, and data processing.

- R: Widely used in statistical analysis and data visualization, making it useful in projects that require in-depth data exploration and statistical modeling.

> Frameworks and Libraries

- TensorFlow: An open-source deep learning framework developed by Google. It’s suited for large-scale projects and production environments, and it also supports model deployment on mobile and web platforms.

- PyTorch: Known for its simplicity and flexibility, PyTorch is often used in academic research and experimentation. It uses dynamic computation graphs, which make models easier to inspect and debug while they run.

- Scikit-learn: A comprehensive library for classical machine learning algorithms such as regression, classification, and clustering. It’s beginner-friendly and widely adopted.

- Keras: A user-friendly high-level API, most commonly used with TensorFlow, that allows quick prototyping of deep learning models.

- XGBoost / LightGBM: High-performance gradient boosting libraries, often used for structured data problems such as Kaggle competitions.

> Development and Monitoring Tools

- Jupyter Notebooks: Provide an interactive interface for writing code, visualizing results, and documenting workflows.

- Google Colab: A cloud-hosted Jupyter notebook service that provides free access to GPUs and TPUs.

- MLflow / Weights & Biases: Tools for experiment tracking, model versioning, and performance monitoring across AI model development cycles.

Common Challenges in Building AI Models

Building AI models involves more than just feeding data into an algorithm. Several technical and practical challenges can affect model accuracy, reliability, and real-world usability. Here are some of the most common challenges:

1. Poor Data Quality

If the training data is inaccurate, inconsistent, or full of errors, the model will learn the wrong patterns. Issues like missing values, duplicate records, and incorrect formatting can lead to misleading outcomes and reduce model performance.

2. Lack of Enough Data

AI models require large and diverse datasets to generalize well. Limited data can lead to high variance in results or a model that cannot handle unseen scenarios. This is especially problematic in areas like image or speech recognition, where the complexity of data requires thousands of examples.

3. Overfitting and Underfitting

Overfitting occurs when a model learns the training data too well, including its noise and outliers, and fails to perform on new data.

Underfitting happens when the model is too simple or not trained enough, and fails to capture underlying patterns in the data.

4. Bias in Data

Bias in training data can lead to discriminatory or unfair predictions. If the dataset lacks diversity or reflects historical inequalities, the AI model will replicate those issues.

5. Choosing the Wrong Model

Every machine learning problem has a set of algorithms that suit it. Using a model that doesn’t match the task (e.g., using linear regression for a classification problem) can result in poor outcomes.

6. Scalability

A model that works well in development may struggle in production. Challenges include high inference time, memory usage, and inability to process real-time or large-scale data efficiently.

Best Practices for Building AI Models

Building a successful AI model goes beyond selecting an algorithm and training it on data. Applying best practices when developing AI models ensures the model is not only accurate but also usable, maintainable, and aligned with real-world needs. Below are some key practices to follow:

1. Start with a Clear Problem Statement

Before writing a single line of code, define the problem clearly. Understand whether it’s a classification, regression, clustering, or recommendation task. A well-defined problem helps guide the data requirements, algorithm selection, and evaluation metrics.

2. Use Clean, Representative Data

The quality of the dataset plays a critical role in how well the model performs. Ensure the data is free from errors, inconsistencies, and duplicates. The dataset should also reflect the real-world diversity of the problem space to avoid bias and improve generalisation.

3. Split Your Dataset Correctly

Properly divide your dataset into training, validation, and testing sets. This separation helps prevent overfitting and ensures that the model is evaluated fairly on unseen data.

4. Select the Algorithm Based on Problem Type

Different problems require different types of algorithms. Use classification algorithms for categorical predictions, regression for numerical outcomes, and clustering for grouping data without labels.

5. Monitor for Overfitting During Training

Keep track of performance on both training and validation data. Early stopping, regularization techniques, and dropout layers (for neural networks) can help manage overfitting.
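Early stopping, for instance, can be sketched as a simple rule: stop once the validation loss has failed to improve for a set number of epochs. The loss values, the `patience` setting, and the function name below are assumptions for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop."""
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch  # validation loss stopped improving
    return len(val_losses) - 1  # never triggered: train through the last epoch

# Hypothetical validation losses: improving at first, then rising as overfitting sets in
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_at = early_stopping_epoch(val_losses)
print(stop_at)
```

In this made-up run the best validation loss occurs at epoch 3, so in practice you would keep the model weights saved at that point rather than the ones from the epoch where training stopped.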

6. Document Every Step

Maintain records of experiments, parameters, and model versions. This makes debugging easier and supports reproducibility, especially when working in teams.

7. Test on Real-World Scenarios

Always evaluate the model using real-world or unseen scenarios to check for robustness and practical usability.

8. Update Models Periodically

Data patterns change over time. Retrain or fine-tune your models regularly to maintain accuracy and relevance.

Real-Life Examples of AI Models in Industries

AI models are being used across various industries to solve real-world problems, improve efficiency, and enhance decision-making. Here are some practical examples of how different sectors are leveraging AI:

1. Healthcare

AI is making a major impact in diagnostics and treatment planning. Deep learning models are used to analyze X-rays, MRIs, and CT scans to detect diseases such as cancer, pneumonia, and brain tumors with high accuracy. Natural language processing (NLP) helps extract insights from electronic health records, while predictive models assist in patient risk assessment and treatment recommendations.

2. Finance

Financial institutions use AI models to monitor and analyze transactions in real time to detect fraudulent activity. Machine learning models flag unusual spending patterns or login behaviors, helping to prevent unauthorized access and financial losses. AI is also used in credit scoring, automated customer service through chatbots, and investment portfolio optimization.

3. Retail

AI-powered recommendation systems track user behavior and purchase history to suggest relevant products. For example, e-commerce platforms like Amazon use collaborative filtering and content-based filtering models to personalize shopping experiences. AI also supports inventory management, demand forecasting, and dynamic pricing strategies.

4. Manufacturing

Predictive maintenance is a key AI application in manufacturing. Machine learning models analyze sensor data from equipment to predict failures before they occur, reducing downtime and maintenance costs. AI is also used in quality control, where image analysis detects product defects on the assembly line.

5. Transportation

AI models enable self-driving vehicles to navigate roads safely. These models process data from cameras, LIDAR, and sensors to identify lanes, road signs, pedestrians, and other vehicles. AI is also used in route optimization, traffic prediction, and driver behavior analysis for fleet management.

Conclusion

Building an AI model is a carefully planned process that includes defining the problem, collecting data, training the model, and finally evaluating it. Developers need to understand the problem clearly and pick the right tools for it. By doing so, they can create AI models that solve real-world problems effectively. Regular monitoring and fine-tuning are also important to keep the model relevant as data patterns and external conditions change.

AI models are reshaping industries all over the world, from healthcare to finance, by automating tasks and reinventing customer experiences. Despite challenges such as data quality issues and model bias, following best practices leads to robust, reliable AI systems that can drive innovation and improve efficiency across sectors.